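from torchvision import datasets, transforms

# Convert images to tensors, then normalize with the MNIST mean and standard deviation.
transformation = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.1307,), (0.3081,)),
])

# download=False assumes the MNIST files are already present under data/.
train_dataset = datasets.MNIST('data/', train=True, transform=transformation, download=False)
test_dataset = datasets.MNIST('data/', train=False, transform=transformation, download=False)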
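
As a rough illustration of what the `Normalize((0.1307,), (0.3081,))` step does to each pixel once `ToTensor()` has scaled it to the range [0, 1], the sketch below applies the same `(x - mean) / std` formula in plain Python. The helper name `normalize_pixel` is made up for this example and is not part of torchvision.

```python
MNIST_MEAN = 0.1307  # mean passed to Normalize above
MNIST_STD = 0.3081   # standard deviation passed to Normalize above


def normalize_pixel(value, mean=MNIST_MEAN, std=MNIST_STD):
    """Apply (value - mean) / std to one pixel already scaled to [0, 1]."""
    return (value - mean) / std


black = normalize_pixel(0.0)  # background pixel
white = normalize_pixel(1.0)  # fully lit stroke pixel
print(f'black -> {black:.4f}, white -> {white:.4f}')
```

Background pixels end up slightly below zero and stroke pixels well above it, so the inputs are roughly centered before they reach the first convolution.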

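import torch.nn as nn
import torch.nn.functional as F


class CNN_bn(nn.Module):
    """Small MNIST CNN with batch normalization after the second convolution."""

    def __init__(self):
        super().__init__()
        self.c1 = nn.Conv2d(1, 10, kernel_size=5)
        self.c2 = nn.Conv2d(10, 20, kernel_size=5)
        self.bn = nn.BatchNorm2d(20)
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)

    def forward(self, x):
        x = F.relu(F.max_pool2d(self.c1(x), 2))
        x = F.relu(F.max_pool2d(self.bn(self.c2(x)), 2))
        x = x.view(-1, 320)  # 20 channels * 4 * 4 spatial positions
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training=self.training)
        x = self.fc2(x)
        # Pass dim explicitly; calling log_softmax without it is deprecated.
        return F.log_softmax(x, dim=1)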
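
The `320` in `nn.Linear(320, 50)` and `x.view(-1, 320)` follows from the layer sizes. The sketch below walks through that arithmetic in plain Python, assuming 28x28 MNIST inputs, stride-1 convolutions without padding, and 2x2 max pooling. The helpers `conv_out` and `pool_out` are illustrative names, not PyTorch functions.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    """Output length of max pooling whose stride equals its kernel size."""
    return size // kernel


size = 28                               # MNIST images are 28x28
size = pool_out(conv_out(size, 5), 2)   # c1 then 2x2 pooling: 28 -> 24 -> 12
size = pool_out(conv_out(size, 5), 2)   # c2 then 2x2 pooling: 12 -> 8 -> 4
flat_features = 20 * size * size        # c2 produces 20 channels
print(flat_features)
```

If the input resolution or kernel sizes change, the same calculation gives the new input width for `fc1`.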

class CNN_do(nn.Module):
    """Same architecture, with channel-wise dropout in place of batch normalization."""

    def __init__(self):
        super().__init__()
        self.c1 = nn.Conv2d(1, 10, kernel_size=5)
        self.c2 = nn.Conv2d(10, 20, kernel_size=5)
        self.c2_drop = nn.Dropout2d()
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)

    def forward(self, x):
        x = F.relu(F.max_pool2d(self.c1(x), 2))
        x = F.relu(F.max_pool2d(self.c2_drop(self.c2(x)), 2))
        x = x.view(-1, 320)  # 20 channels * 4 * 4 spatial positions
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training=self.training)
        x = self.fc2(x)
        # Pass dim explicitly; calling log_softmax without it is deprecated.
        return F.log_softmax(x, dim=1)
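
Both models call `F.dropout(x, training=self.training)`, so dropout is active only in training mode. The sketch below is a simplified, element-wise version of that behaviour in plain Python, assuming the default drop probability of 0.5 and `p < 1`. Note that `nn.Dropout2d` differs in that it zeroes whole channels rather than single elements. The function name and the seeded generator are choices made for this example.

```python
import random


def dropout(values, p=0.5, training=True, rng=random):
    """Inverted dropout on a flat list: zero with probability p, rescale the rest."""
    if not training or p == 0.0:
        return list(values)
    scale = 1.0 / (1.0 - p)  # keeps the expected value unchanged (requires p < 1)
    return [0.0 if rng.random() < p else v * scale for v in values]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
activations = [1.0, 2.0, 3.0, 4.0]
train_out = dropout(activations, p=0.5, training=True, rng=rng)
eval_out = dropout(activations, p=0.5, training=False, rng=rng)
print(train_out)
print(eval_out)
```

In evaluation mode the values pass through untouched, which is why the model must be switched with `model.eval()` before validation.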

tensor([[[ 0.9066, 0.1089, -1.9883, -1.6145, 0.1072, -1.3022, -0.7888]]])

tensor([[[-0.1690,  0.6463,  0.1258, -0.8724,  0.4924]]],
       grad_fn=<SqueezeBackward1>)

Parameter containing:

tensor([[[ 0.3372, -0.4791, 0.2125]]], requires_grad=True)
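
The three printed tensors above appear to be the input, output, and weight of a one-dimensional convolution. The code that produced them is not shown, so that reading is an assumption. Under it, and further assuming stride 1, no padding, and no bias term, a plain-Python sliding dot product reproduces the printed output to within rounding. The function name `conv1d_valid` is hypothetical.

```python
def conv1d_valid(signal, kernel):
    """Slide the kernel over the signal without padding (cross-correlation)."""
    width = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(width))
        for i in range(len(signal) - width + 1)
    ]


# Values copied from the printed tensors (already rounded to 4 decimals).
signal = [0.9066, 0.1089, -1.9883, -1.6145, 0.1072, -1.3022, -0.7888]
kernel = [0.3372, -0.4791, 0.2125]
printed = [-0.1690, 0.6463, 0.1258, -0.8724, 0.4924]

output = conv1d_valid(signal, kernel)
print([round(v, 4) for v in output])
```

Seven inputs and a kernel of width three give 7 - 3 + 1 = 5 outputs, matching the length of the second tensor.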

import torch


def fit(epoch, model, data_loader, phase='training'):
    """Run one epoch in the given phase; relies on a module-level `optimizer`."""
    if phase == 'training':
        model.train()
    if phase == 'validation':
        model.eval()

    running_loss = 0.0
    running_correct = 0
    # Track gradients only while training. This replaces the removed
    # Variable(..., volatile=True) idiom.
    with torch.set_grad_enabled(phase == 'training'):
        for batch_idx, (data, target) in enumerate(data_loader):
            if torch.cuda.is_available():
                data, target = data.cuda(), target.cuda()
            if phase == 'training':
                optimizer.zero_grad()
            output = model(data)
            loss = F.nll_loss(output, target)
            # Accumulate the summed loss so the epoch average is per sample.
            running_loss += F.nll_loss(output, target, reduction='sum').item()
            preds = output.argmax(dim=1)
            running_correct += (preds == target).sum().item()
            if phase == 'training':
                loss.backward()
                optimizer.step()

    loss = running_loss / len(data_loader.dataset)
    accuracy = 100. * running_correct / len(data_loader.dataset)
    print(f'{phase} loss is {loss:5.2} and {phase} accuracy is '
          f'{running_correct}/{len(data_loader.dataset)}{accuracy:10.4}')
    return loss, accuracy
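
To make the bookkeeping in `fit` concrete, the sketch below recomputes the same quantities in plain Python for a tiny made-up batch: log-softmax scores, the negative log-likelihood of each target summed over the batch, the arg-max predictions, and the resulting per-sample loss and percentage accuracy. The logits and targets are invented for illustration and are not MNIST data.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over one list of class scores."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return [v - log_sum for v in logits]


def nll(log_probs, target):
    """Negative log-likelihood of the target class for one sample."""
    return -log_probs[target]


# Two made-up samples with three classes each.
batch_logits = [[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]]
targets = [0, 1]

log_probs = [log_softmax(row) for row in batch_logits]
summed_loss = sum(nll(lp, t) for lp, t in zip(log_probs, targets))
preds = [max(range(len(lp)), key=lp.__getitem__) for lp in log_probs]
correct = sum(int(p == t) for p, t in zip(preds, targets))

mean_loss = summed_loss / len(targets)
accuracy = 100.0 * correct / len(targets)
print(f'loss {mean_loss:.4f}, accuracy {accuracy:.1f}%')
```

Summing per-sample losses and dividing once by the dataset size gives a correct average even when the last batch is smaller than the others.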

CNN_bn(
  (c1): Conv2d(1, 10, kernel_size=(5, 5), stride=(1, 1))
  (c2): Conv2d(10, 20, kernel_size=(5, 5), stride=(1, 1))
  (bn): BatchNorm2d(20, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (fc1): Linear(in_features=320, out_features=50, bias=True)
  (fc2): Linear(in_features=50, out_features=10, bias=True)
)
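
The `eps=1e-05` and `momentum=0.1` values in the `BatchNorm2d` line above control how each channel is normalized and how the running statistics are tracked. The following is a minimal single-channel sketch in plain Python, assuming the affine scale and shift are still at their initial values of 1 and 0 and the running mean starts at 0. The function names are illustrative only.

```python
def batch_norm_1ch(values, eps=1e-5):
    """Normalize one channel's values with the batch mean and biased variance."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    normalized = [(v - mean) / (var + eps) ** 0.5 for v in values]
    return normalized, mean, var


def update_running(running, batch_stat, momentum=0.1):
    """Exponential moving average used for the tracked running statistics."""
    return (1 - momentum) * running + momentum * batch_stat


channel = [1.0, 2.0, 3.0, 4.0]          # made-up activations for one channel
normalized, mean, var = batch_norm_1ch(channel)
running_mean = update_running(0.0, mean)
print(normalized, running_mean)
```

During training the batch statistics are used as shown. In evaluation mode the layer normalizes with the running estimates instead, which is another reason `model.eval()` matters for validation.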

CNN_do(
  (c1): Conv2d(1, 10, kernel_size=(5, 5), stride=(1, 1))
  (c2): Conv2d(10, 20, kernel_size=(5, 5), stride=(1, 1))
  (c2_drop): Dropout2d(p=0.5, inplace=False)
  (fc1): Linear(in_features=320, out_features=50, bias=True)
  (fc2): Linear(in_features=50, out_features=10, bias=True)
)

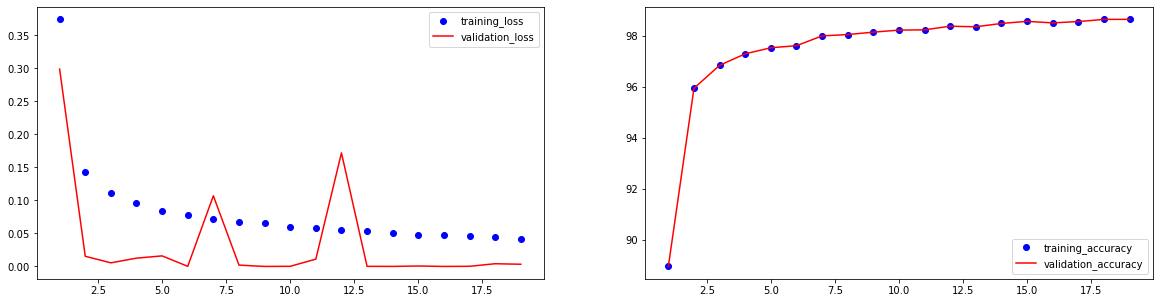

import time

from torch import optim

optimizer = optim.SGD(model_bn.parameters(), lr=0.01, momentum=0.5)
train_losses_bn, train_accuracy_bn = [], []
val_losses_bn, val_accuracy_bn = [], []

start_time = time.time()
for epoch in range(1, 20):  # epochs 1 through 19
    epoch_loss, epoch_accuracy = fit(epoch, model_bn, train_loader, phase='training')
    val_epoch_loss, val_epoch_accuracy = fit(epoch, model_bn, test_loader, phase='validation')
    train_losses_bn.append(epoch_loss)
    train_accuracy_bn.append(epoch_accuracy)
    val_losses_bn.append(val_epoch_loss)
    val_accuracy_bn.append(val_epoch_accuracy)
print(f'{time.time() - start_time} seconds elapsed')
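
For intuition about the `lr=0.01, momentum=0.5` settings passed to `optim.SGD`, here is a minimal plain-Python sketch of the momentum update on a toy one-parameter problem. It assumes no dampening, weight decay, or Nesterov correction, and the function `sgd_momentum_step` is a name invented for this example.

```python
def sgd_momentum_step(param, grad, velocity, lr=0.01, momentum=0.5):
    """One update: velocity = momentum * velocity + grad; param -= lr * velocity."""
    velocity = momentum * velocity + grad
    return param - lr * velocity, velocity


# Minimize the toy function f(w) = w**2, whose gradient is 2 * w.
w, velocity = 1.0, 0.0
history = [w]
for _ in range(5):
    w, velocity = sgd_momentum_step(w, 2 * w, velocity)
    history.append(w)
print(history)
```

Each step reuses half of the previous update direction, so consecutive gradients that point the same way produce progressively larger steps.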

import matplotlib.pyplot as plt

# Left panel: loss per epoch. Right panel: accuracy per epoch.
plt.figure(figsize=(20, 5))
plt.subplot(1, 2, 1)
plt.plot(range(1, len(train_losses_bn) + 1), train_losses_bn, 'bo', label='training_loss')
plt.plot(range(1, len(val_losses_bn) + 1), val_losses_bn, 'r', label='validation_loss')
plt.legend()
plt.subplot(1, 2, 2)
plt.plot(range(1, len(train_accuracy_bn) + 1), train_accuracy_bn, 'bo', label='training_accuracy')
plt.plot(range(1, len(val_accuracy_bn) + 1), val_accuracy_bn, 'r', label='validation_accuracy')
plt.legend()

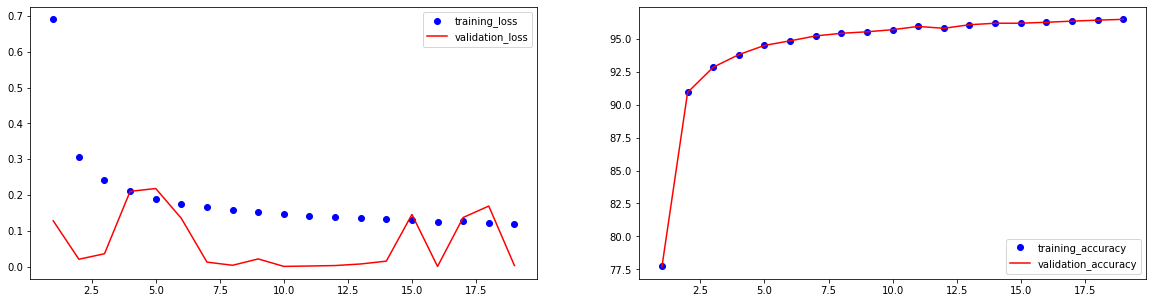

optimizer = optim.SGD(model_do.parameters(), lr=0.01, momentum=0.5)
train_losses_do, train_accuracy_do = [], []
val_losses_do, val_accuracy_do = [], []

start_time = time.time()
for epoch in range(1, 20):  # epochs 1 through 19
    epoch_loss, epoch_accuracy = fit(epoch, model_do, train_loader, phase='training')
    val_epoch_loss, val_epoch_accuracy = fit(epoch, model_do, test_loader, phase='validation')
    train_losses_do.append(epoch_loss)
    train_accuracy_do.append(epoch_accuracy)
    val_losses_do.append(val_epoch_loss)
    val_accuracy_do.append(val_epoch_accuracy)
print(f'{time.time() - start_time} seconds elapsed')

# Left panel: loss per epoch. Right panel: accuracy per epoch.
plt.figure(figsize=(20, 5))
plt.subplot(1, 2, 1)
plt.plot(range(1, len(train_losses_do) + 1), train_losses_do, 'bo', label='training_loss')
plt.plot(range(1, len(val_losses_do) + 1), val_losses_do, 'r', label='validation_loss')
plt.legend()
plt.subplot(1, 2, 2)
plt.plot(range(1, len(train_accuracy_do) + 1), train_accuracy_do, 'bo', label='training_accuracy')
plt.plot(range(1, len(val_accuracy_do) + 1), val_accuracy_do, 'r', label='validation_accuracy')
plt.legend()