Time Series Analysis - Deep Learning for Time Series Forecasting


import tensorflow.keras

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

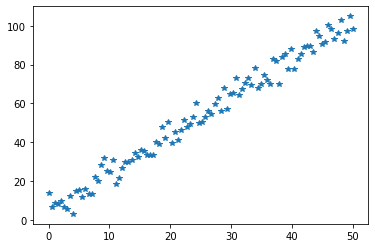

y = mx + b + noise

m = 2

b = 3

x = np.linspace(0, 50, 100)

np.random.seed(101)

noise = np.random.normal(loc=0, scale=4, size=len(x))

y = m*x + b + noise

plt.plot(x, y, '*')

[<matplotlib.lines.Line2D at 0x1a4db22fc18>]

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

model = Sequential()

model.add(Dense(4, input_dim=1,activation='relu'))

model.add(Dense(4, activation ='relu'))

model.add(Dense(1, activation ='relu'))

model.compile(loss = 'mse')

model.summary()

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_3 (Dense) (None, 4) 8

_________________________________________________________________

dense_4 (Dense) (None, 4) 20

_________________________________________________________________

dense_5 (Dense) (None, 1) 5

=================================================================

Total params: 33

Trainable params: 33

Non-trainable params: 0

_________________________________________________________________
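The parameter counts in the summary above can be checked by hand: a Dense layer has one weight per input-output pair plus one bias per output unit. A minimal sketch that reproduces the numbers (the helper name `dense_params` is only for illustration and is not part of Keras):

```python
def dense_params(n_inputs, n_units):
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units

# Layer sizes of the model above: 1 input -> 4 -> 4 -> 1
layer_sizes = [1, 4, 4, 1]
counts = [dense_params(i, o) for i, o in zip(layer_sizes[:-1], layer_sizes[1:])]
print(counts, sum(counts))  # [8, 20, 5] 33
```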

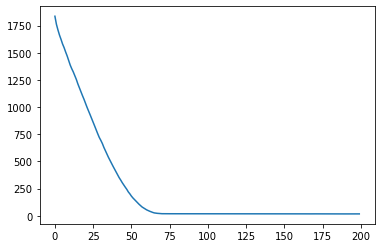

model.fit(x,y,epochs=200)

Epoch 1/200

4/4 [==============================] - 0s 1ms/step - loss: 1835.2828

Epoch 2/200

4/4 [==============================] - 0s 1ms/step - loss: 1760.2566

Epoch 3/200

4/4 [==============================] - 0s 1ms/step - loss: 1708.3179

Epoch 4/200

4/4 [==============================] - 0s 1ms/step - loss: 1661.5303

Epoch 5/200

4/4 [==============================] - 0s 1ms/step - loss: 1622.3416

Epoch 6/200

4/4 [==============================] - 0s 2ms/step - loss: 1580.1083

Epoch 7/200

4/4 [==============================] - 0s 1ms/step - loss: 1546.7878

Epoch 8/200

4/4 [==============================] - 0s 1ms/step - loss: 1506.9521

Epoch 9/200

4/4 [==============================] - 0s 1ms/step - loss: 1470.2085

Epoch 10/200

4/4 [==============================] - 0s 1ms/step - loss: 1427.7432

Epoch 11/200

4/4 [==============================] - 0s 1ms/step - loss: 1386.2589

Epoch 12/200

4/4 [==============================] - 0s 1ms/step - loss: 1352.8347

Epoch 13/200

4/4 [==============================] - 0s 1ms/step - loss: 1323.4415

Epoch 14/200

4/4 [==============================] - 0s 1ms/step - loss: 1288.4734

Epoch 15/200

4/4 [==============================] - 0s 1ms/step - loss: 1254.3790

Epoch 16/200

4/4 [==============================] - 0s 1ms/step - loss: 1213.1908

Epoch 17/200

4/4 [==============================] - 0s 1ms/step - loss: 1177.0500

Epoch 18/200

4/4 [==============================] - 0s 1ms/step - loss: 1143.3599

Epoch 19/200

4/4 [==============================] - 0s 2ms/step - loss: 1105.8571

Epoch 20/200

4/4 [==============================] - 0s 1ms/step - loss: 1072.1377

Epoch 21/200

4/4 [==============================] - 0s 1ms/step - loss: 1034.3806

Epoch 22/200

4/4 [==============================] - 0s 1ms/step - loss: 997.8810

Epoch 23/200

4/4 [==============================] - 0s 1ms/step - loss: 962.5549

Epoch 24/200

4/4 [==============================] - 0s 1ms/step - loss: 929.9268

Epoch 25/200

4/4 [==============================] - 0s 1ms/step - loss: 895.7763

Epoch 26/200

4/4 [==============================] - 0s 2ms/step - loss: 861.8133

Epoch 27/200

4/4 [==============================] - 0s 1ms/step - loss: 825.4382

Epoch 28/200

4/4 [==============================] - 0s 1ms/step - loss: 788.8192

Epoch 29/200

4/4 [==============================] - 0s 1ms/step - loss: 754.3546

Epoch 30/200

4/4 [==============================] - 0s 1ms/step - loss: 720.8946

Epoch 31/200

4/4 [==============================] - 0s 2ms/step - loss: 694.4216

Epoch 32/200

4/4 [==============================] - 0s 1ms/step - loss: 665.4741

Epoch 33/200

4/4 [==============================] - 0s 1ms/step - loss: 629.9188

Epoch 34/200

4/4 [==============================] - 0s 2ms/step - loss: 598.7121

Epoch 35/200

4/4 [==============================] - 0s 1ms/step - loss: 567.5757

Epoch 36/200

4/4 [==============================] - 0s 1ms/step - loss: 537.9247

Epoch 37/200

4/4 [==============================] - 0s 1ms/step - loss: 510.0300

Epoch 38/200

4/4 [==============================] - 0s 1ms/step - loss: 480.5556

Epoch 39/200

4/4 [==============================] - 0s 1ms/step - loss: 455.6969

Epoch 40/200

4/4 [==============================] - 0s 1ms/step - loss: 429.8373

Epoch 41/200

4/4 [==============================] - 0s 1ms/step - loss: 402.3074

Epoch 42/200

4/4 [==============================] - 0s 1ms/step - loss: 376.8388

Epoch 43/200

4/4 [==============================] - 0s 1ms/step - loss: 351.1939

Epoch 44/200

4/4 [==============================] - 0s 1ms/step - loss: 329.2676

Epoch 45/200

4/4 [==============================] - 0s 1ms/step - loss: 305.4028

Epoch 46/200

4/4 [==============================] - 0s 1ms/step - loss: 284.1473

Epoch 47/200

4/4 [==============================] - 0s 1ms/step - loss: 263.4900

Epoch 48/200

4/4 [==============================] - 0s 2ms/step - loss: 243.5607

Epoch 49/200

4/4 [==============================] - 0s 1ms/step - loss: 219.7183

Epoch 50/200

4/4 [==============================] - 0s 1ms/step - loss: 202.2714

Epoch 51/200

4/4 [==============================] - 0s 1ms/step - loss: 181.6279

Epoch 52/200

4/4 [==============================] - 0s 1ms/step - loss: 164.6638

Epoch 53/200

4/4 [==============================] - 0s 1ms/step - loss: 149.9858

Epoch 54/200

4/4 [==============================] - 0s 1ms/step - loss: 135.7605

Epoch 55/200

4/4 [==============================] - 0s 1ms/step - loss: 120.9801

Epoch 56/200

4/4 [==============================] - 0s 1ms/step - loss: 106.5698

Epoch 57/200

4/4 [==============================] - 0s 1ms/step - loss: 94.0438

Epoch 58/200

4/4 [==============================] - 0s 1ms/step - loss: 81.3139

Epoch 59/200

4/4 [==============================] - 0s 1ms/step - loss: 72.4593

Epoch 60/200

4/4 [==============================] - 0s 1ms/step - loss: 63.2077

Epoch 61/200

4/4 [==============================] - 0s 1ms/step - loss: 54.8502

Epoch 62/200

4/4 [==============================] - 0s 1ms/step - loss: 48.2167

Epoch 63/200

4/4 [==============================] - 0s 1ms/step - loss: 42.0426

Epoch 64/200

4/4 [==============================] - 0s 1ms/step - loss: 36.3565

Epoch 65/200

4/4 [==============================] - 0s 2ms/step - loss: 31.2589

Epoch 66/200

4/4 [==============================] - 0s 1ms/step - loss: 26.9042

Epoch 67/200

4/4 [==============================] - 0s 1ms/step - loss: 24.5516

Epoch 68/200

4/4 [==============================] - 0s 1ms/step - loss: 23.0759

Epoch 69/200

4/4 [==============================] - 0s 1ms/step - loss: 21.8395

Epoch 70/200

4/4 [==============================] - 0s 1ms/step - loss: 20.5880

Epoch 71/200

4/4 [==============================] - 0s 1ms/step - loss: 19.7270

Epoch 72/200

4/4 [==============================] - 0s 1ms/step - loss: 19.4509

Epoch 73/200

4/4 [==============================] - 0s 1ms/step - loss: 19.5544

Epoch 74/200

4/4 [==============================] - 0s 1ms/step - loss: 19.4520

Epoch 75/200

4/4 [==============================] - 0s 1ms/step - loss: 19.4514

Epoch 76/200

4/4 [==============================] - 0s 1ms/step - loss: 19.3336

Epoch 77/200

4/4 [==============================] - 0s 1ms/step - loss: 19.2367

Epoch 78/200

4/4 [==============================] - 0s 1ms/step - loss: 19.4528

Epoch 79/200

4/4 [==============================] - 0s 1ms/step - loss: 19.1718

Epoch 80/200

4/4 [==============================] - 0s 1ms/step - loss: 19.2670

Epoch 81/200

4/4 [==============================] - 0s 1ms/step - loss: 19.4927

Epoch 82/200

4/4 [==============================] - 0s 1ms/step - loss: 19.2007

Epoch 83/200

4/4 [==============================] - 0s 1ms/step - loss: 19.4057

Epoch 84/200

4/4 [==============================] - 0s 1ms/step - loss: 19.1590

Epoch 85/200

4/4 [==============================] - 0s 1ms/step - loss: 19.1011

Epoch 86/200

4/4 [==============================] - 0s 1ms/step - loss: 19.2685

Epoch 87/200

4/4 [==============================] - 0s 1ms/step - loss: 19.0871

Epoch 88/200

4/4 [==============================] - 0s 1ms/step - loss: 19.1214

Epoch 89/200

4/4 [==============================] - 0s 1ms/step - loss: 19.1207

Epoch 90/200

4/4 [==============================] - 0s 2ms/step - loss: 19.2000

Epoch 91/200

4/4 [==============================] - 0s 2ms/step - loss: 19.0114

Epoch 92/200

4/4 [==============================] - 0s 1ms/step - loss: 19.0037

Epoch 93/200

4/4 [==============================] - 0s 1ms/step - loss: 19.4451

Epoch 94/200

4/4 [==============================] - 0s 2ms/step - loss: 19.1021

Epoch 95/200

4/4 [==============================] - 0s 1ms/step - loss: 19.0582

Epoch 96/200

4/4 [==============================] - 0s 2ms/step - loss: 19.0108

Epoch 97/200

4/4 [==============================] - 0s 1ms/step - loss: 19.0246

Epoch 98/200

4/4 [==============================] - 0s 2ms/step - loss: 18.9366

Epoch 99/200

4/4 [==============================] - 0s 1ms/step - loss: 18.9903

Epoch 100/200

4/4 [==============================] - 0s 1ms/step - loss: 18.9929

Epoch 101/200

4/4 [==============================] - 0s 1ms/step - loss: 18.9058

Epoch 102/200

4/4 [==============================] - 0s 1ms/step - loss: 18.9509

Epoch 103/200

4/4 [==============================] - 0s 1ms/step - loss: 19.0329

Epoch 104/200

4/4 [==============================] - 0s 1ms/step - loss: 18.9732

Epoch 105/200

4/4 [==============================] - 0s 1ms/step - loss: 18.7965

Epoch 106/200

4/4 [==============================] - 0s 1ms/step - loss: 18.8212

Epoch 107/200

4/4 [==============================] - 0s 1ms/step - loss: 18.7402

Epoch 108/200

4/4 [==============================] - 0s 1ms/step - loss: 18.8385

Epoch 109/200

4/4 [==============================] - 0s 1ms/step - loss: 18.8170

Epoch 110/200

4/4 [==============================] - 0s 1ms/step - loss: 18.8779

Epoch 111/200

4/4 [==============================] - 0s 997us/step - loss: 18.7897

Epoch 112/200

4/4 [==============================] - 0s 1ms/step - loss: 18.9200

Epoch 113/200

4/4 [==============================] - 0s 1ms/step - loss: 18.9472

Epoch 114/200

4/4 [==============================] - 0s 1ms/step - loss: 18.8327

Epoch 115/200

4/4 [==============================] - 0s 1ms/step - loss: 18.7283

Epoch 116/200

4/4 [==============================] - 0s 1ms/step - loss: 18.6641

Epoch 117/200

4/4 [==============================] - 0s 1ms/step - loss: 18.9163

Epoch 118/200

4/4 [==============================] - 0s 1ms/step - loss: 18.5791

Epoch 119/200

4/4 [==============================] - 0s 1ms/step - loss: 18.6373

Epoch 120/200

4/4 [==============================] - 0s 1ms/step - loss: 18.5254

Epoch 121/200

4/4 [==============================] - 0s 1ms/step - loss: 18.5407

Epoch 122/200

4/4 [==============================] - 0s 1ms/step - loss: 18.7938

Epoch 123/200

4/4 [==============================] - 0s 1ms/step - loss: 18.9352

Epoch 124/200

4/4 [==============================] - 0s 1ms/step - loss: 18.7772

Epoch 125/200

4/4 [==============================] - 0s 997us/step - loss: 18.5229

Epoch 126/200

4/4 [==============================] - 0s 1ms/step - loss: 18.5174

Epoch 127/200

4/4 [==============================] - 0s 1ms/step - loss: 18.4520

Epoch 128/200

4/4 [==============================] - 0s 1ms/step - loss: 18.4360

Epoch 129/200

4/4 [==============================] - 0s 1ms/step - loss: 18.4862

Epoch 130/200

4/4 [==============================] - 0s 1ms/step - loss: 18.5956

Epoch 131/200

4/4 [==============================] - 0s 1ms/step - loss: 18.5477

Epoch 132/200

4/4 [==============================] - 0s 1ms/step - loss: 18.5845

Epoch 133/200

4/4 [==============================] - 0s 1ms/step - loss: 18.3726

Epoch 134/200

4/4 [==============================] - 0s 1ms/step - loss: 18.4655

Epoch 135/200

4/4 [==============================] - 0s 1ms/step - loss: 18.3289

Epoch 136/200

4/4 [==============================] - 0s 1ms/step - loss: 18.3460

Epoch 137/200

4/4 [==============================] - 0s 1ms/step - loss: 18.3917

Epoch 138/200

4/4 [==============================] - 0s 1ms/step - loss: 18.3464

Epoch 139/200

4/4 [==============================] - 0s 1ms/step - loss: 18.4719

Epoch 140/200

4/4 [==============================] - 0s 1ms/step - loss: 18.6031

Epoch 141/200

4/4 [==============================] - 0s 1ms/step - loss: 18.4234

Epoch 142/200

4/4 [==============================] - 0s 1ms/step - loss: 18.3097

Epoch 143/200

4/4 [==============================] - 0s 1ms/step - loss: 18.2677

Epoch 144/200

4/4 [==============================] - 0s 1ms/step - loss: 18.3238

Epoch 145/200

4/4 [==============================] - 0s 1ms/step - loss: 18.2502

Epoch 146/200

4/4 [==============================] - 0s 1ms/step - loss: 18.3489

Epoch 147/200

4/4 [==============================] - 0s 1ms/step - loss: 18.2561

Epoch 148/200

4/4 [==============================] - 0s 1ms/step - loss: 18.2409

Epoch 149/200

4/4 [==============================] - 0s 1ms/step - loss: 18.4153

Epoch 150/200

4/4 [==============================] - 0s 1ms/step - loss: 18.1522

Epoch 151/200

4/4 [==============================] - 0s 1ms/step - loss: 18.1873

Epoch 152/200

4/4 [==============================] - 0s 1ms/step - loss: 18.2808

Epoch 153/200

4/4 [==============================] - 0s 1ms/step - loss: 18.1157

Epoch 154/200

4/4 [==============================] - 0s 1ms/step - loss: 18.1804

Epoch 155/200

4/4 [==============================] - 0s 1ms/step - loss: 18.3109

Epoch 156/200

4/4 [==============================] - 0s 1ms/step - loss: 18.3864

Epoch 157/200

4/4 [==============================] - 0s 1ms/step - loss: 18.3333

Epoch 158/200

4/4 [==============================] - 0s 1ms/step - loss: 18.3745

Epoch 159/200

4/4 [==============================] - 0s 1ms/step - loss: 18.3049

Epoch 160/200

4/4 [==============================] - 0s 1ms/step - loss: 18.1015

Epoch 161/200

4/4 [==============================] - 0s 1ms/step - loss: 18.0976

Epoch 162/200

4/4 [==============================] - 0s 1ms/step - loss: 18.1385

Epoch 163/200

4/4 [==============================] - 0s 1ms/step - loss: 18.1505

Epoch 164/200

4/4 [==============================] - 0s 1ms/step - loss: 18.1945

Epoch 165/200

4/4 [==============================] - 0s 1ms/step - loss: 18.2722

Epoch 166/200

4/4 [==============================] - 0s 1ms/step - loss: 17.9870

Epoch 167/200

4/4 [==============================] - 0s 1ms/step - loss: 17.9870

Epoch 168/200

4/4 [==============================] - 0s 1ms/step - loss: 18.1463

Epoch 169/200

4/4 [==============================] - 0s 1ms/step - loss: 18.5038

Epoch 170/200

4/4 [==============================] - 0s 2ms/step - loss: 18.1617

Epoch 171/200

4/4 [==============================] - 0s 1ms/step - loss: 18.3358

Epoch 172/200

4/4 [==============================] - 0s 1ms/step - loss: 18.2571

Epoch 173/200

4/4 [==============================] - 0s 1ms/step - loss: 17.9925

Epoch 174/200

4/4 [==============================] - 0s 1ms/step - loss: 17.9974

Epoch 175/200

4/4 [==============================] - 0s 1ms/step - loss: 18.1721

Epoch 176/200

4/4 [==============================] - 0s 1ms/step - loss: 18.0019

Epoch 177/200

4/4 [==============================] - 0s 1ms/step - loss: 17.9564

Epoch 178/200

4/4 [==============================] - 0s 1ms/step - loss: 17.9195

Epoch 179/200

4/4 [==============================] - 0s 1ms/step - loss: 17.9515

Epoch 180/200

4/4 [==============================] - 0s 1ms/step - loss: 17.9477

Epoch 181/200

4/4 [==============================] - 0s 1ms/step - loss: 17.8825

Epoch 182/200

4/4 [==============================] - 0s 1ms/step - loss: 17.9276

Epoch 183/200

4/4 [==============================] - 0s 2ms/step - loss: 17.9231

Epoch 184/200

4/4 [==============================] - 0s 1ms/step - loss: 18.0652

Epoch 185/200

4/4 [==============================] - 0s 2ms/step - loss: 17.9978

Epoch 186/200

4/4 [==============================] - 0s 1ms/step - loss: 17.9539

Epoch 187/200

4/4 [==============================] - 0s 1ms/step - loss: 17.9550

Epoch 188/200

4/4 [==============================] - 0s 1ms/step - loss: 17.8413

Epoch 189/200

4/4 [==============================] - 0s 1ms/step - loss: 18.0824

Epoch 190/200

4/4 [==============================] - 0s 1ms/step - loss: 17.8459

Epoch 191/200

4/4 [==============================] - 0s 1ms/step - loss: 17.7732

Epoch 192/200

4/4 [==============================] - 0s 1ms/step - loss: 17.7758

Epoch 193/200

4/4 [==============================] - 0s 1ms/step - loss: 17.9182

Epoch 194/200

4/4 [==============================] - 0s 1ms/step - loss: 17.7538

Epoch 195/200

4/4 [==============================] - 0s 1ms/step - loss: 17.8182

Epoch 196/200

4/4 [==============================] - 0s 1ms/step - loss: 17.7276

Epoch 197/200

4/4 [==============================] - 0s 1ms/step - loss: 17.9054

Epoch 198/200

4/4 [==============================] - 0s 1ms/step - loss: 18.1024

Epoch 199/200

4/4 [==============================] - 0s 1ms/step - loss: 17.7250

Epoch 200/200

4/4 [==============================] - 0s 2ms/step - loss: 17.9618

<tensorflow.python.keras.callbacks.History at 0x1a4db1a3390>

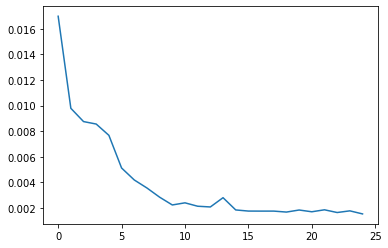

loss = model.history.history['loss']

epochs = range(len(loss))

plt.plot(epochs, loss)

[<matplotlib.lines.Line2D at 0x1a4dcfc7320>]

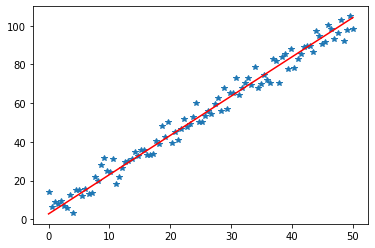

x_for_predictions = np.linspace(0, 50, 100)

y_pred = model.predict(x_for_predictions)

y_pred

array([[ 2.7469127],

[ 3.77153 ],

[ 4.796148 ],

[ 5.820765 ],

[ 6.8453813],

[ 7.8699994],

[ 8.894616 ],

[ 9.919232 ],

[ 10.94385 ],

[ 11.968469 ],

[ 12.993085 ],

[ 14.017701 ],

[ 15.042319 ],

[ 16.066938 ],

[ 17.091555 ],

[ 18.116175 ],

[ 19.140789 ],

[ 20.165407 ],

[ 21.190025 ],

[ 22.214643 ],

[ 23.23926 ],

[ 24.263876 ],

[ 25.288492 ],

[ 26.313112 ],

[ 27.337729 ],

[ 28.362345 ],

[ 29.386963 ],

[ 30.411581 ],

[ 31.436197 ],

[ 32.46081 ],

[ 33.48543 ],

[ 34.510048 ],

[ 35.534664 ],

[ 36.559277 ],

[ 37.5839 ],

[ 38.608517 ],

[ 39.633137 ],

[ 40.65775 ],

[ 41.682373 ],

[ 42.70699 ],

[ 43.7316 ],

[ 44.75622 ],

[ 45.780834 ],

[ 46.805458 ],

[ 47.83007 ],

[ 48.854683 ],

[ 49.879303 ],

[ 50.90392 ],

[ 51.92854 ],

[ 52.953156 ],

[ 53.977776 ],

[ 55.00239 ],

[ 56.02701 ],

[ 57.05163 ],

[ 58.076244 ],

[ 59.10086 ],

[ 60.125477 ],

[ 61.15009 ],

[ 62.17471 ],

[ 63.199333 ],

[ 64.22395 ],

[ 65.248566 ],

[ 66.273186 ],

[ 67.2978 ],

[ 68.32242 ],

[ 69.34703 ],

[ 70.37166 ],

[ 71.39627 ],

[ 72.42089 ],

[ 73.4455 ],

[ 74.470116 ],

[ 75.49474 ],

[ 76.51936 ],

[ 77.543976 ],

[ 78.568596 ],

[ 79.59321 ],

[ 80.617836 ],

[ 81.64245 ],

[ 82.66706 ],

[ 83.69168 ],

[ 84.7163 ],

[ 85.74092 ],

[ 86.765526 ],

[ 87.79015 ],

[ 88.814766 ],

[ 89.83939 ],

[ 90.864006 ],

[ 91.88862 ],

[ 92.91323 ],

[ 93.93785 ],

[ 94.96246 ],

[ 95.98709 ],

[ 97.0117 ],

[ 98.03632 ],

[ 99.060936 ],

[100.085556 ],

[101.11017 ],

[102.134796 ],

[103.1594 ],

[104.18403 ]], dtype=float32)

plt.plot(x,y, '*')

plt.plot(x_for_predictions, y_pred, 'r')

[<matplotlib.lines.Line2D at 0x1a4dd057f28>]

from sklearn.metrics import mean_squared_error

from statsmodels.tools.eval_measures import mse

print(mean_squared_error(y,y_pred))

17.764145266496467
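Because the noise was drawn with a standard deviation of 4, its variance is 16, so an MSE in that neighbourhood is roughly the best any model can do on this data. As a point of comparison, here is a sketch of an ordinary least-squares line fit with `np.polyfit` on the same synthetic data. The names `slope`, `intercept`, `y_line` and `baseline_mse` are introduced here for illustration only.

```python
import numpy as np

# Recreate the synthetic data from the cells above
m, b = 2, 3
x = np.linspace(0, 50, 100)
np.random.seed(101)
noise = np.random.normal(loc=0, scale=4, size=len(x))
y = m * x + b + noise

# Ordinary least-squares fit of a straight line (degree-1 polynomial);
# polyfit returns coefficients from the highest degree down
slope, intercept = np.polyfit(x, y, 1)
y_line = slope * x + intercept

baseline_mse = np.mean((y - y_line) ** 2)
print(slope, intercept, baseline_mse)
```

If the network's MSE is close to this baseline, it has learned about as much as the data allows.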

a = [1,2,3,4,5]

b = [2,3,4,5,6]

mean_squared_error(a,b)

1.0

mse(a,b)

1.0

y_pred_reshape = y_pred.reshape(100, )

y_pred_reshape.shape

(100,)

mse(y, y_pred_reshape)

17.764145266496467
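Both functions compute the mean of the squared element-wise differences. The sketch below does the same by hand with NumPy and also shows a shape pitfall: subtracting a `(100, 1)` array from a `(100,)` array broadcasts to `(100, 100)`, which is likely why `y_pred` is reshaped before being passed to the statsmodels `mse`. The arrays `y_flat` and `y_col` are placeholders, not the notebook's data.

```python
import numpy as np

a = np.array([1, 2, 3, 4, 5])
b = np.array([2, 3, 4, 5, 6])

# Mean of the squared element-wise differences
manual_mse = np.mean((a - b) ** 2)
print(manual_mse)  # 1.0

# Shape pitfall: (100,) minus (100, 1) broadcasts to (100, 100)
y_flat = np.zeros(100)
y_col = np.zeros((100, 1))
print((y_flat - y_col).shape)  # (100, 100)
```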

Recurrent Neural Network

import pandas as pd

import numpy as np

%matplotlib inline

import matplotlib.pyplot as plt

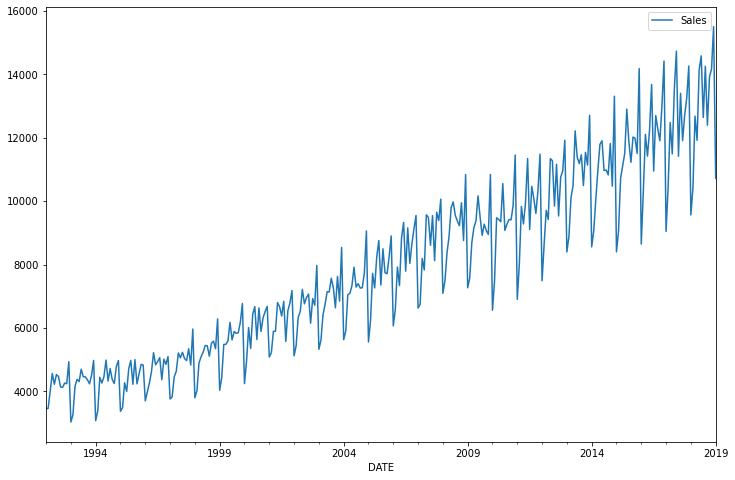

df = pd.read_csv('Data/Alcohol_Sales.csv',index_col='DATE',parse_dates=True)

df.index.freq = 'MS'

df.head()

| DATE | S4248SM144NCEN |
|---|---|
| 1992-01-01 | 3459 |
| 1992-02-01 | 3458 |
| 1992-03-01 | 4002 |
| 1992-04-01 | 4564 |
| 1992-05-01 | 4221 |

df.columns = ['Sales']

df.plot(figsize=(12,8))

<AxesSubplot:xlabel='DATE'>

from statsmodels.tsa.seasonal import seasonal_decompose

results = seasonal_decompose(df['Sales'])

results.seasonal #plot(figsize=(12,8))

DATE

1992-01-01 -1836.088354

1992-02-01 -1321.328738

1992-03-01 -148.299892

1992-04-01 -240.372008

1992-05-01 554.966133

...

2018-09-01 -109.224572

2018-10-01 260.730557

2018-11-01 258.666454

2018-12-01 1303.892416

2019-01-01 -1836.088354

Freq: MS, Name: seasonal, Length: 325, dtype: float64

len(df)

325

nobs = 12

train = df.iloc[:-nobs]

test = df.iloc[-nobs:]

ser = np.array([23,56,2,13,14])

ser.max()

56

ser / ser.max()

array([0.41071429, 1. , 0.03571429, 0.23214286, 0.25 ])

from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler()

scaler.fit(train) # learns the min and max of the training data only

MinMaxScaler()

scaled_train = scaler.transform(train)

scaled_test = scaler.transform(test)
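With its default settings, `MinMaxScaler` maps each value to `(x - min) / (max - min)`, using the minimum and maximum seen during `fit`. A minimal NumPy sketch of the same arithmetic on the toy series from above (the names `lo`, `hi`, `scaled` and `restored` are illustrative):

```python
import numpy as np

ser = np.array([23, 56, 2, 13, 14], dtype=float)

# "Fit": remember the minimum and maximum of the training values
lo, hi = ser.min(), ser.max()

# "Transform": map [lo, hi] onto [0, 1]
scaled = (ser - lo) / (hi - lo)

# "Inverse transform": undo the mapping
restored = scaled * (hi - lo) + lo

# A new value above the training maximum scales to more than 1
new_value_scaled = (60.0 - lo) / (hi - lo)
print(scaled.min(), scaled.max(), new_value_scaled)
```

Since the scaler is fitted on the training set only, scaled test values (and forecasts) can fall slightly outside the 0 to 1 range.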

from tensorflow.keras.preprocessing.sequence import TimeseriesGenerator

n_input = 3

n_features = 1

generator = TimeseriesGenerator(scaled_train, scaled_train,length=n_input,batch_size=1)

len(scaled_train)

313

len(generator)

310

X,y = generator[0]

print(X)

print(y)

[[[0.03658432]

[0.03649885]

[0.08299855]]]

[[0.13103684]]

X.shape

(1, 3, 1)
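Each generator item pairs a window of `length` consecutive values with the value that immediately follows it, which is why a series of 313 points with `length=3` yields 310 samples. The sketch below reproduces that windowing in plain NumPy. `make_windows` is a hypothetical helper, not the Keras implementation, and the toy `series` stands in for `scaled_train`.

```python
import numpy as np

def make_windows(series, length):
    """Return (X, y): X[i] holds `length` consecutive values, y[i] is the next one."""
    n_samples = len(series) - length
    X = np.array([series[i:i + length] for i in range(n_samples)])
    y = np.array([series[i + length] for i in range(n_samples)])
    return X, y

series = np.arange(10, dtype=float).reshape(-1, 1)  # toy stand-in for scaled_train
X_all, y_all = make_windows(series, length=3)
print(X_all.shape, y_all.shape)    # (7, 3, 1) (7, 1)
print(X_all[0].ravel(), y_all[0])  # [0. 1. 2.] [3.]
```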

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, LSTM

n_input = 12

n_features = 1

train_generator = TimeseriesGenerator(scaled_train, scaled_train,length=n_input,batch_size=1)

model = Sequential()

model.add(LSTM(150, activation = 'relu', input_shape=(n_input, n_features)))

model.add(Dense(1))

model.compile(optimizer='adam', loss='mse')

WARNING:tensorflow:Layer lstm_1 will not use cuDNN kernel since it doesn't meet the cuDNN kernel criteria. It will use generic GPU kernel as fallback when running on GPU

model.summary()

Model: "sequential_3"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

lstm_1 (LSTM) (None, 150) 91200

_________________________________________________________________

dense_7 (Dense) (None, 1) 151

=================================================================

Total params: 91,351

Trainable params: 91,351

Non-trainable params: 0

_________________________________________________________________
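The 91,200 parameters of the LSTM layer follow from its structure: four weight sets (input, forget and output gates plus the cell candidate), each with input weights, recurrent weights and a bias. A short sketch of the arithmetic, with helper names chosen only for illustration:

```python
def lstm_params(n_units, n_features):
    """Four weight sets, each with input weights, recurrent weights and a bias."""
    return 4 * (n_units * n_features + n_units * n_units + n_units)

def dense_params(n_inputs, n_units):
    """One weight per input-output pair plus one bias per unit."""
    return n_inputs * n_units + n_units

lstm = lstm_params(150, 1)    # 91200
dense = dense_params(150, 1)  # 151
print(lstm, dense, lstm + dense)  # 91200 151 91351
```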

# fit_generator is deprecated; Model.fit accepts generators directly

model.fit(train_generator, epochs=25)

Epoch 1/25

301/301 [==============================] - 5s 18ms/step - loss: 0.0170

Epoch 2/25

301/301 [==============================] - 5s 18ms/step - loss: 0.0098

Epoch 3/25

301/301 [==============================] - 5s 18ms/step - loss: 0.0087

Epoch 4/25

301/301 [==============================] - 5s 18ms/step - loss: 0.0086

Epoch 5/25

301/301 [==============================] - 5s 18ms/step - loss: 0.0077

Epoch 6/25

301/301 [==============================] - 5s 15ms/step - loss: 0.0051

Epoch 7/25

301/301 [==============================] - 5s 16ms/step - loss: 0.0042

Epoch 8/25

301/301 [==============================] - 5s 15ms/step - loss: 0.0036

Epoch 9/25

301/301 [==============================] - 5s 15ms/step - loss: 0.0028

Epoch 10/25

301/301 [==============================] - 5s 15ms/step - loss: 0.0022

Epoch 11/25

301/301 [==============================] - 5s 15ms/step - loss: 0.0024

Epoch 12/25

301/301 [==============================] - 5s 16ms/step - loss: 0.0021

Epoch 13/25

301/301 [==============================] - 5s 16ms/step - loss: 0.0021

Epoch 14/25

301/301 [==============================] - 5s 16ms/step - loss: 0.0028

Epoch 15/25

301/301 [==============================] - 5s 15ms/step - loss: 0.0018

Epoch 16/25

301/301 [==============================] - 5s 16ms/step - loss: 0.0017

Epoch 17/25

301/301 [==============================] - 5s 16ms/step - loss: 0.0017

Epoch 18/25

301/301 [==============================] - 5s 15ms/step - loss: 0.0017

Epoch 19/25

301/301 [==============================] - 5s 16ms/step - loss: 0.0017

Epoch 20/25

301/301 [==============================] - 5s 16ms/step - loss: 0.0018

Epoch 21/25

301/301 [==============================] - 5s 15ms/step - loss: 0.0017

Epoch 22/25

301/301 [==============================] - 5s 16ms/step - loss: 0.0018

Epoch 23/25

301/301 [==============================] - 5s 16ms/step - loss: 0.0016

Epoch 24/25

301/301 [==============================] - 5s 15ms/step - loss: 0.0018

Epoch 25/25

301/301 [==============================] - 5s 16ms/step - loss: 0.0015

<tensorflow.python.keras.callbacks.History at 0x1a5fca6e0f0>

model.history.history.keys()

dict_keys(['loss'])

plt.plot(range(len(model.history.history['loss'])),model.history.history['loss'])

[<matplotlib.lines.Line2D at 0x1a5ffd1dda0>]

# 12 history steps ---> step 13

# Last 12 points train --> point 1 of test data

first_eval_batch = scaled_train[-12:]

first_eval_batch

array([[0.63432772],

[0.80776135],

[0.72313873],

[0.89870929],

[1. ],

[0.71672793],

[0.88648602],

[0.75869732],

[0.82742115],

[0.87443371],

[0.96025301],

[0.5584238 ]])

first_eval_batch = first_eval_batch.reshape((1,n_input, n_features))

model.predict(first_eval_batch)

array([[0.7314653]], dtype=float32)

FORECAST USING RNN MODEL

test_predictions = []

first_eval_batch = scaled_train[-n_input:]

current_batch = first_eval_batch.reshape((1, n_input, n_features))

for i in range(len(test)):

    current_pred = model.predict(current_batch)[0]

    test_predictions.append(current_pred)

    current_batch = np.append(current_batch[:,1:,:],[[current_pred]],axis=1)

test_predictions

[array([0.7314653], dtype=float32),

array([0.8324642], dtype=float32),

array([0.8028721], dtype=float32),

array([0.94515663], dtype=float32),

array([1.008543], dtype=float32),

array([0.79361135], dtype=float32),

array([0.9141835], dtype=float32),

array([0.8050848], dtype=float32),

array([0.87166405], dtype=float32),

array([0.91545624], dtype=float32),

array([0.97476], dtype=float32),

array([0.66045696], dtype=float32)]
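The forecast loop feeds each prediction back in as the newest input: the oldest time step is dropped from the window and the prediction is appended at the end. The sketch below isolates that window update. `fake_predict` is a purely hypothetical stand-in for `model.predict` (it returns the window mean), and the three-point `history` is toy data.

```python
import numpy as np

n_input, n_features = 3, 1

def fake_predict(batch):
    """Stand-in for model.predict: returns the window mean with shape (1, 1)."""
    return batch.mean(axis=1)

history = np.array([[0.2], [0.4], [0.6]])
current_batch = history.reshape((1, n_input, n_features))

forecasts = []
for _ in range(4):
    current_pred = fake_predict(current_batch)[0]  # shape (1,)
    forecasts.append(current_pred)
    # Drop the oldest step, append the prediction as the newest step
    current_batch = np.append(current_batch[:, 1:, :], [[current_pred]], axis=1)

print(current_batch.shape)        # (1, 3, 1)
print(np.array(forecasts).shape)  # (4, 1)
```

Because later forecasts are built on earlier forecasts rather than on observed values, errors can compound as the horizon grows.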

true_predictions = scaler.inverse_transform(test_predictions)

true_predictions

array([[11588.41231138],

[12769.99888802],

[12423.80094749],

[14088.38745946],

[14829.94472694],

[12315.45915645],

[13726.03273642],

[12449.68736255],

[13228.59768867],

[13740.92249805],

[14434.71719247],

[10757.68592161]])

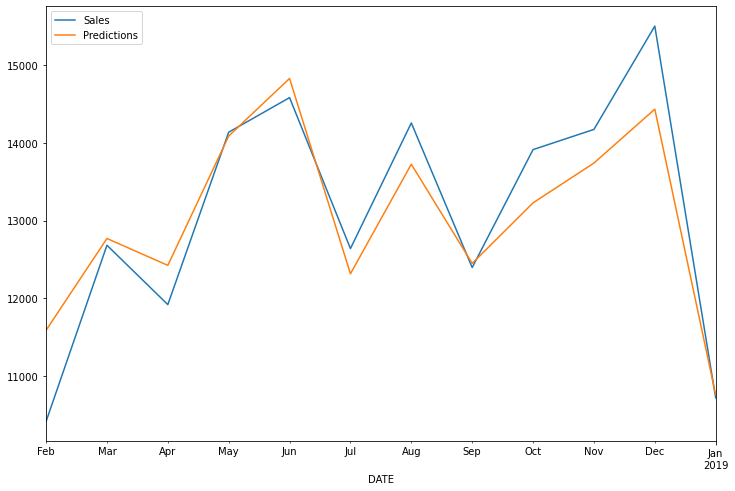

test = test.copy() # work on an explicit copy to avoid SettingWithCopyWarning

test['Predictions'] = true_predictions

test

| DATE | Sales | Predictions |
|---|---|---|
| 2018-02-01 | 10415 | 11588.412311 |
| 2018-03-01 | 12683 | 12769.998888 |
| 2018-04-01 | 11919 | 12423.800947 |
| 2018-05-01 | 14138 | 14088.387459 |
| 2018-06-01 | 14583 | 14829.944727 |
| 2018-07-01 | 12640 | 12315.459156 |
| 2018-08-01 | 14257 | 13726.032736 |
| 2018-09-01 | 12396 | 12449.687363 |
| 2018-10-01 | 13914 | 13228.597689 |
| 2018-11-01 | 14174 | 13740.922498 |
| 2018-12-01 | 15504 | 14434.717192 |
| 2019-01-01 | 10718 | 10757.685922 |

test.plot(figsize=(12,8))

<AxesSubplot:xlabel='DATE'>
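The plot gives a visual impression of the fit. One way to put a number on it, sketched here as a suggestion rather than as part of the original notebook, is the root mean squared error, which is expressed in the same units as the Sales column. The values are copied from the test table, and `rmse` is an illustrative name.

```python
import numpy as np

# Values copied from the test table above
sales = np.array([10415, 12683, 11919, 14138, 14583, 12640,
                  14257, 12396, 13914, 14174, 15504, 10718], dtype=float)
predictions = np.array([11588.412311, 12769.998888, 12423.800947, 14088.387459,
                        14829.944727, 12315.459156, 13726.032736, 12449.687363,
                        13228.597689, 13740.922498, 14434.717192, 10757.685922])

# Root mean squared error, and the same error relative to average sales
rmse = np.sqrt(np.mean((sales - predictions) ** 2))
print(rmse, rmse / sales.mean())
```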

model.save('mycoolmodel.h5')

pwd

'C:\\Users\\ilvna\\Udemy'

from tensorflow.keras.models import load_model

new_model = load_model('mycoolmodel.h5')

WARNING:tensorflow:Layer lstm_1 will not use cuDNN kernel since it doesn't meet the cuDNN kernel criteria. It will use generic GPU kernel as fallback when running on GPU

new_model.summary()

Model: "sequential_3"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

lstm_1 (LSTM) (None, 150) 91200

_________________________________________________________________

dense_7 (Dense) (None, 1) 151

=================================================================

Total params: 91,351

Trainable params: 91,351

Non-trainable params: 0

_________________________________________________________________