Time Series Forecasting using TensorFlow and Deep Hybrid Learning

Imports

import pandas as pd

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

Data

df = pd.read_csv('susnpot.csv', index_col = 0)

df

| | Date | Monthly Mean Total Sunspot Number |
|---|---|---|
| 0 | 1749-01-31 | 96.7 |
| 1 | 1749-02-28 | 104.3 |
| 2 | 1749-03-31 | 116.7 |
| 3 | 1749-04-30 | 92.8 |
| 4 | 1749-05-31 | 141.7 |
| ... | ... | ... |
| 3230 | 2018-03-31 | 2.5 |
| 3231 | 2018-04-30 | 8.9 |
| 3232 | 2018-05-31 | 13.2 |
| 3233 | 2018-06-30 | 15.9 |
| 3234 | 2018-07-31 | 1.6 |

3235 rows × 2 columns
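The `Date` column is read as plain strings. If you want real timestamps (for example, to label the forecast plots with dates instead of integer positions), `pd.read_csv` can convert them while loading via `parse_dates`. A minimal sketch, using three rows copied from the table above in place of the full CSV file:

```python
import io

import pandas as pd

# Three rows from the table above, standing in for the full CSV file.
csv_text = """,Date,Monthly Mean Total Sunspot Number
0,1749-01-31,96.7
1,1749-02-28,104.3
2,1749-03-31,116.7
"""

# index_col=0 uses the unnamed first column as the index, as in the notebook;
# parse_dates turns the Date column into datetime64 values.
df_sample = pd.read_csv(io.StringIO(csv_text), index_col=0, parse_dates=['Date'])

print(df_sample.dtypes)
print(df_sample['Date'].dt.year.tolist())  # [1749, 1749, 1749]
```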

Preprocessing

time_idx = np.array(df['Date'])

data = np.array(df['Monthly Mean Total Sunspot Number'])

split_ratio = 0.8

window_size = 60

batch_size = 32

shuffle_buffer = 1000

split_idx = int(split_ratio * data.shape[0])

split_idx

2588

train_data = data[:split_idx]

train_time = time_idx[:split_idx]

test_data = data[split_idx:]

test_time = time_idx[split_idx:]

print(len(train_data), len(test_data))

2588 647

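def ts_data_generator(data, window_size, batch_size, shuffle_buffer):
    # Slide a window of window_size + 1 values over the series, one step at a time.
    ts_data = tf.data.Dataset.from_tensor_slices(data)
    ts_data = ts_data.window(window_size + 1, shift=1, drop_remainder=True)
    # Each window is a nested dataset; batch it into a single tensor.
    ts_data = ts_data.flat_map(lambda window: window.batch(window_size + 1))
    # Shuffle the windows, then split each into (first window_size values, last value).
    ts_data = ts_data.shuffle(shuffle_buffer).map(lambda window: (window[:-1], window[-1]))
    ts_data = ts_data.batch(batch_size).prefetch(1)
    return ts_data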

tensor_train_data = tf.expand_dims(train_data, axis=-1)

tensor_test_data = tf.expand_dims(test_data, axis=-1)

tensor_train_dataset = ts_data_generator(tensor_train_data, window_size, batch_size, shuffle_buffer)

tensor_test_dataset = ts_data_generator(tensor_test_data, window_size, batch_size, shuffle_buffer)
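To see what `ts_data_generator` produces before shuffling and batching, here is a NumPy-only sketch of the same windowing. The helper `make_windows` is hypothetical and only mirrors the `window` / `flat_map` / `map` steps on a toy series:

```python
import numpy as np


def make_windows(series, window_size):
    """Build (inputs, labels) like ts_data_generator, without shuffling or batching.

    Each input is `window_size` consecutive values and its label is the value
    that immediately follows them."""
    n_windows = len(series) - window_size
    inputs = np.stack([series[i:i + window_size] for i in range(n_windows)])
    labels = series[window_size:]
    return inputs, labels


toy = np.arange(10, dtype=float)
X, y = make_windows(toy, window_size=3)
print(X.shape, y.shape)  # (7, 3) (7,)
print(X[0], y[0])        # first window is 0, 1, 2 and its label is 3
```

Applied to the notebook's training split, the same counting gives 2588 - 60 = 2528 windows, i.e. exactly 79 batches of 32; the test split gives 647 - 60 = 587 windows, i.e. 18 full batches plus one batch of 11.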

Models

from tensorflow.keras.layers import Input, Conv1D, LSTM, Dense

from tensorflow.keras.models import Model

from tensorflow.keras.callbacks import EarlyStopping

optimizer = tf.keras.optimizers.SGD(learning_rate=1e-4, momentum=0.9)

es = EarlyStopping(monitor='val_loss', patience=15)

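DHL (Conv1D + LSTM)

def build_dhl():
    # Causal Conv1D feature extractor, two stacked LSTMs, then a small dense head.
    inputs = Input(shape=[None, 1])
    d = Conv1D(filters=32, kernel_size=5, strides=1, padding='causal', activation='relu')(inputs)
    d = LSTM(64, return_sequences=True)(d)
    d = LSTM(64, return_sequences=True)(d)
    d = Dense(30, activation='relu')(d)
    d = Dense(10, activation='relu')(d)
    d = Dense(1, activation='relu')(d)
    return Model(inputs, d)

# Build two independent copies. Calling Model(inputs, d) twice on the same graph
# would give both models the same layers (and weights), so training one would
# also change the other.
dhl_huber = build_dhl()

dhl_mae = build_dhl()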
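`padding='causal'` left-pads the input with zeros so that the convolution output at step *t* depends only on inputs at steps up to *t*; the Conv1D layer therefore never sees future values. A single-channel NumPy sketch of that behaviour (an illustration with one filter and `kernel_size=3`, not the Keras implementation, which uses 32 filters of size 5 here):

```python
import numpy as np


def causal_conv1d(x, kernel):
    """Single-channel causal 1D convolution (cross-correlation, as Keras computes it).

    The input is left-padded with len(kernel) - 1 zeros, so output[t] uses
    only x[t - k + 1], ..., x[t] and the output has the same length as x."""
    k = len(kernel)
    padded = np.concatenate([np.zeros(k - 1), x])
    return np.array([np.dot(padded[t:t + k], kernel) for t in range(len(x))])


x = np.array([1.0, 2.0, 3.0, 4.0])
print(causal_conv1d(x, np.ones(3)))  # [1. 3. 6. 9.]

# Changing the last input leaves every earlier output unchanged.
x_future = np.array([1.0, 2.0, 3.0, 100.0])
print(causal_conv1d(x_future, np.ones(3))[:3])  # [1. 3. 6.]
```

Because the output length equals the input length, the Conv1D layer can feed the LSTMs with `return_sequences=True` and the model still emits one prediction per input time step.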

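Simple LSTM

def build_lstm():
    # Same architecture as the DHL model, minus the Conv1D front end.
    inputs = Input(shape=[None, 1])
    x = LSTM(64, return_sequences=True)(inputs)
    x = LSTM(64, return_sequences=True)(x)
    x = Dense(30, activation='relu')(x)
    x = Dense(10, activation='relu')(x)
    x = Dense(1, activation='relu')(x)
    return Model(inputs, x)

# Two independent copies, so the Huber and MAE runs do not share weights.
lstm_huber = build_lstm()

lstm_mae = build_lstm()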

Compile

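def new_optimizer():
    # A fresh copy of `optimizer` with the same hyperparameters. Recent TensorFlow
    # versions can raise an error when one optimizer instance is reused across
    # models with different weights.
    return optimizer.__class__.from_config(optimizer.get_config())

dhl_huber.compile(loss = tf.keras.losses.Huber(), optimizer=new_optimizer(), metrics=['mae'])

dhl_mae.compile(loss = tf.keras.losses.MAE, optimizer=new_optimizer(), metrics=['mae'])

lstm_huber.compile(loss = tf.keras.losses.Huber(), optimizer=new_optimizer(), metrics=['mae'])

lstm_mae.compile(loss = tf.keras.losses.MAE, optimizer=new_optimizer(), metrics=['mae'])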
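The Huber and MAE losses differ only for small errors. Huber is quadratic when the absolute error is at most `delta` and linear beyond it; `tf.keras.losses.Huber()` uses `delta=1.0` by default. A NumPy sketch of both losses (for illustration, not the Keras source):

```python
import numpy as np


def huber(y_true, y_pred, delta=1.0):
    """Mean Huber loss; delta=1.0 matches the tf.keras.losses.Huber default."""
    err = np.abs(y_true - y_pred)
    quadratic = 0.5 * err ** 2              # used where |error| <= delta
    linear = delta * err - 0.5 * delta ** 2  # used where |error| > delta
    return np.mean(np.where(err <= delta, quadratic, linear))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return np.mean(np.abs(y_true - y_pred))


y_true = np.array([10.0, 10.0, 10.0])
y_pred = np.array([10.5, 12.0, 40.0])  # errors of 0.5, 2 and 30
print(huber(y_true, y_pred))  # (0.125 + 1.5 + 29.5) / 3 = 10.375
print(mae(y_true, y_pred))    # (0.5 + 2 + 30) / 3 ≈ 10.83
```

For every error larger than `delta`, the Huber term is just the absolute error minus a constant, so its gradient equals the MAE gradient. Sunspot numbers run into the tens and hundreds, so most training errors here are likely to fall in that linear region.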

hist_dhl_huber = dhl_huber.fit(tensor_train_dataset, epochs=200, validation_data=tensor_test_dataset, callbacks=[es], verbose=0)

hist_dhl_mae = dhl_mae.fit(tensor_train_dataset, epochs=200, validation_data=tensor_test_dataset, callbacks=[es], verbose=0)

hist_lstm_huber = lstm_huber.fit(tensor_train_dataset, epochs=200, validation_data=tensor_test_dataset, callbacks=[es], verbose=0)

hist_lstm_mae = lstm_mae.fit(tensor_train_dataset, epochs=200, validation_data=tensor_test_dataset, callbacks=[es], verbose=0)
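`EarlyStopping(monitor='val_loss', patience=15)` ends a run once 15 consecutive epochs pass without a new lowest validation loss, so `epochs=200` is only an upper bound. A simplified pure-Python version of that rule (the helper `early_stopping_epoch` is hypothetical and ignores `min_delta`, `baseline` and the callback's other options):

```python
def early_stopping_epoch(val_losses, patience):
    """Return how many epochs run before a patience-based early stop.

    Training stops once `patience` consecutive epochs pass without a new
    best (lowest) validation loss; otherwise every epoch runs."""
    best = float('inf')
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss   # new best: reset the counter
            wait = 0
        else:
            wait += 1     # no improvement this epoch
            if wait >= patience:
                return epoch
    return len(val_losses)


# Best loss at epoch 3, then two epochs without improvement: stops after epoch 5.
print(early_stopping_epoch([5.0, 4.0, 3.0, 3.5, 3.2, 3.1], patience=2))  # 5
```

Because `restore_best_weights` defaults to `False`, each model keeps the weights from its final epoch rather than from its best validation epoch; passing `restore_best_weights=True` to `EarlyStopping` changes that.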

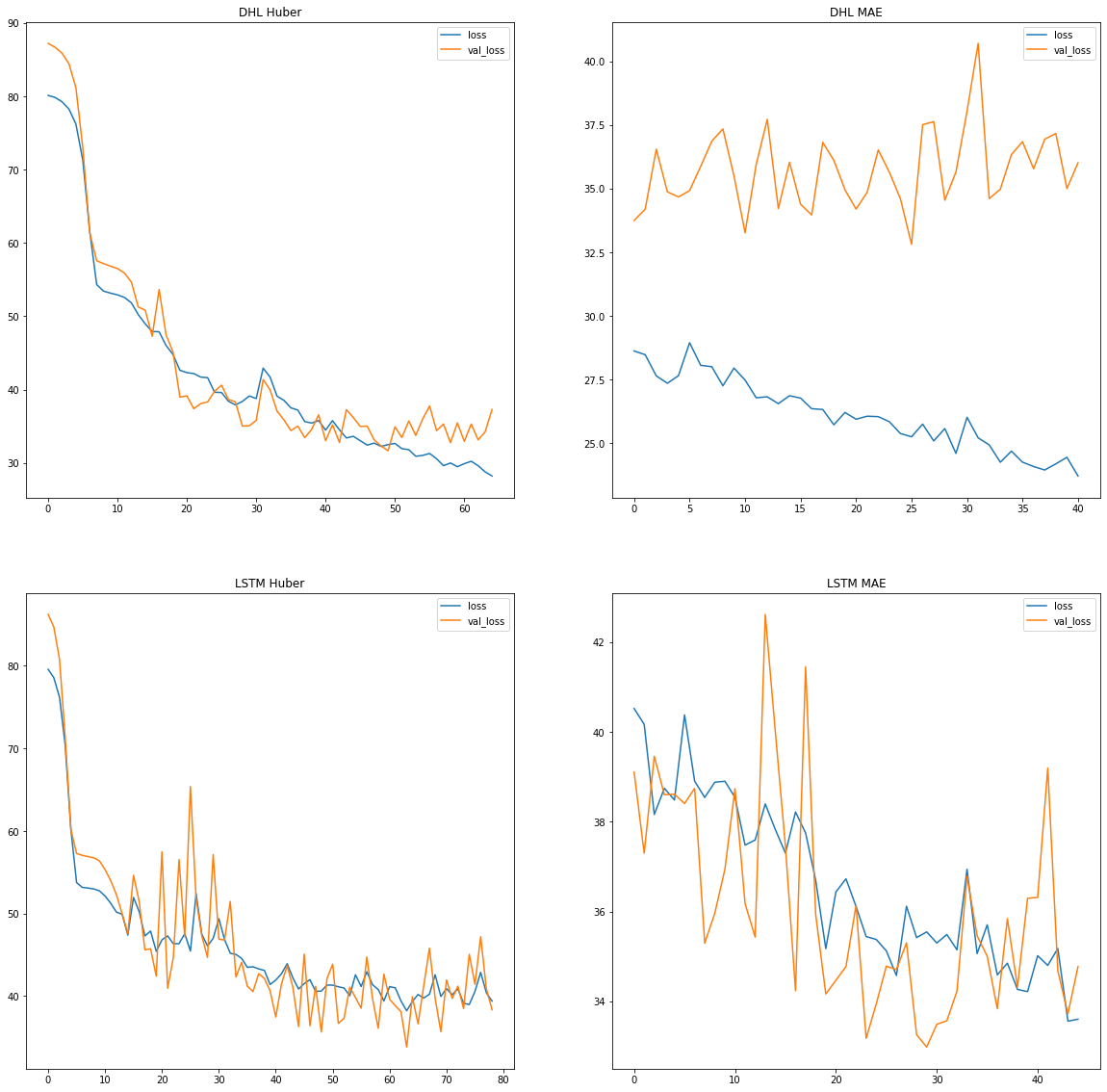

plt.figure(figsize=(20, 20))

plt.subplot(2,2,1)

plt.plot(hist_dhl_huber.history['loss'], label='loss')

plt.plot(hist_dhl_huber.history['val_loss'], label='val_loss')

plt.title('DHL Huber')

plt.legend()

plt.subplot(2,2,2)

plt.plot(hist_dhl_mae.history['loss'], label='loss')

plt.plot(hist_dhl_mae.history['val_loss'], label='val_loss')

plt.title('DHL MAE')

plt.legend()

plt.subplot(2,2,3)

plt.plot(hist_lstm_huber.history['loss'], label='loss')

plt.plot(hist_lstm_huber.history['val_loss'], label='val_loss')

plt.title('LSTM Huber')

plt.legend()

plt.subplot(2,2,4)

plt.plot(hist_lstm_mae.history['loss'], label='loss')

plt.plot(hist_lstm_mae.history['val_loss'], label='val_loss')

plt.title('LSTM MAE')

plt.legend()


Forecasting

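def model_forecasting(model, data, window_size):
    # Slide a window of window_size values over the full series (no labels, no shuffling).
    ds = tf.data.Dataset.from_tensor_slices(data)
    ds = ds.window(window_size, shift=1, drop_remainder=True)
    ds = ds.flat_map(lambda w: w.batch(window_size))
    ds = ds.batch(32).prefetch(1)
    # Shape (number of windows, window_size, 1): one prediction per time step of each window.
    forecast = model.predict(ds)
    return forecast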

forecast_dhl = model_forecasting(dhl_huber, data[..., np.newaxis], window_size)

forecast_dhl = forecast_dhl[split_idx - window_size: -1, -1, 0]

forecast_lstm = model_forecasting(lstm_huber, data[..., np.newaxis], window_size)

forecast_lstm = forecast_lstm[split_idx - window_size: -1, -1, 0]
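The slice `[split_idx - window_size: -1, -1, 0]` keeps the last-time-step prediction (`-1`) of each window, drops the channel axis (`0`), and selects only the windows whose forecast falls in the test split. A toy-sized check of that alignment, with small made-up sizes in place of the notebook's 3235 points, 60-step window and split at 2588:

```python
import numpy as np

# Toy stand-ins for the notebook's variables (sizes are illustrative only).
series = np.arange(20, dtype=float)
window_size = 4
split_idx = 16

# model_forecasting slides a window over the whole series: window i covers
# series[i:i + window_size] and its last-step output forecasts series[i + window_size].
n_windows = len(series) - window_size + 1
targets = np.arange(n_windows) + window_size  # index forecast by each window

# Same slice as the notebook: start at the first window that forecasts split_idx,
# and drop the final window, whose target lies past the end of the series.
kept = targets[split_idx - window_size:-1]
print(kept)  # [16 17 18 19]
```

The kept targets are exactly the test indices `split_idx, ..., len(series) - 1`, which is why `forecast_dhl` and `forecast_lstm` end up with the same length as `test_data` (647 values in the notebook).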

error_dhl = tf.keras.metrics.mean_absolute_error(test_data, forecast_dhl).numpy()

error_lstm = tf.keras.metrics.mean_absolute_error(test_data, forecast_lstm).numpy()

print(error_dhl, error_lstm)

26.230343 22.918995

Visualization

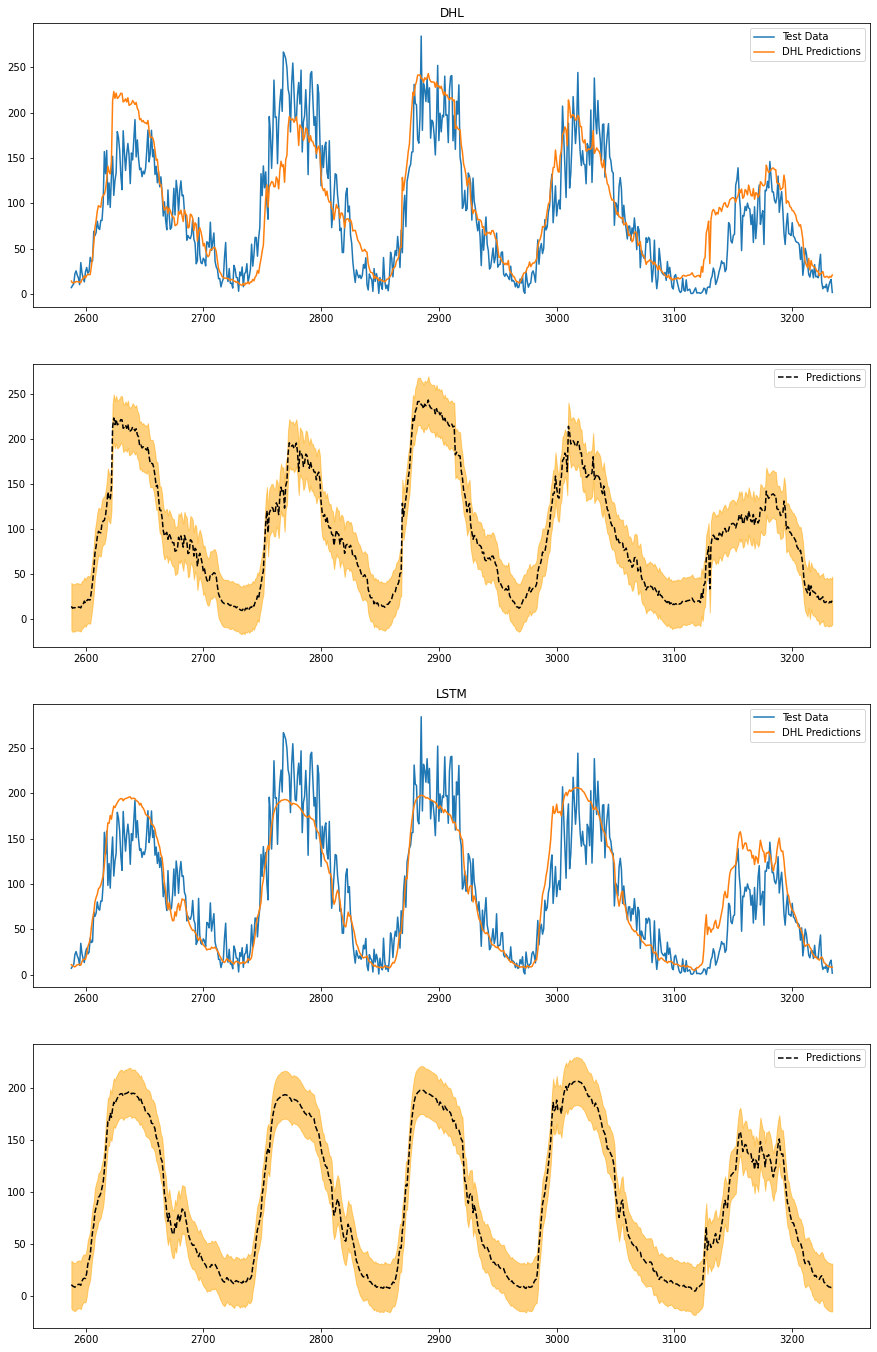

plt.figure(figsize=(15,24))

plt.subplot(4,1,1)

plt.plot(list(range(split_idx, len(data))), test_data, label='Test Data')

plt.plot(list(range(split_idx, len(data))), forecast_dhl, label='DHL Predictions')

plt.title('DHL')

plt.legend()

plt.subplot(4,1,2)

plt.plot(list(range(split_idx, len(data))), forecast_dhl, label='Predictions', color='k', linestyle = '--')

plt.fill_between(range(split_idx, len(data)), forecast_dhl - error_dhl, forecast_dhl + error_dhl, alpha = 0.5, color='orange', label='± MAE')

plt.title('DHL predictions ± MAE')

plt.legend()

plt.subplot(4,1,3)

plt.plot(list(range(split_idx, len(data))), test_data, label='Test Data')

plt.plot(list(range(split_idx, len(data))), forecast_lstm, label='LSTM Predictions')

plt.title('LSTM')

plt.legend()

plt.subplot(4,1,4)

plt.plot(list(range(split_idx, len(data))), forecast_lstm, label='Predictions', color='k', linestyle = '--')

plt.fill_between(range(split_idx, len(data)), forecast_lstm - error_lstm, forecast_lstm + error_lstm, alpha = 0.5, color='orange', label='± MAE')

plt.title('LSTM predictions ± MAE')

plt.legend()


Reference : Time Series Forecasting using TensorFlow and Deep Hybrid Learning