Colorization Autoencoder

from tensorflow.keras.layers import Dense, Input, Conv2D, Flatten, Reshape, Conv2DTranspose

from tensorflow.keras.models import Model

from tensorflow.keras.callbacks import ReduceLROnPlateau, ModelCheckpoint

from tensorflow.keras.datasets import cifar10

from tensorflow.keras.utils import plot_model

from tensorflow.keras import backend as K

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import os

Dataset

def rbg2gray(rgb):
    # Luma-weighted sum of the R, G, B channels (ITU-R BT.601 weights): (..., 3) -> (...)
    return np.dot(rgb[..., :3], [0.299, 0.587, 0.114])
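rbg2gray applies the standard luma weighting with the ITU-R BT.601 coefficients 0.299, 0.587 and 0.114. It does this as a dot product over the channel axis. The minimal sketch below runs the same formula on a hand-made 1x4 image to show what the dot product does to the shape and how much each channel contributes. `to_gray` and `LUMA_WEIGHTS` are hypothetical names that simply repeat the formula.

```python
import numpy as np

# BT.601 luma weights: the same coefficients used by rbg2gray
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])


def to_gray(rgb):
    """Weighted sum over the last (channel) axis: (..., 3) -> (...)."""
    return np.dot(rgb[..., :3], LUMA_WEIGHTS)


# A 1x4 "image" in the uint8 range CIFAR-10 uses: red, green, blue, white
pixels = np.array([[[255, 0, 0], [0, 255, 0], [0, 0, 255], [255, 255, 255]]],
                  dtype=np.uint8)
gray = to_gray(pixels)

print(gray.shape)        # the channel axis is gone: (1, 4)
print(np.round(gray, 3))  # green contributes most, blue least
```

The weights sum to 1, so white stays at 255 and every gray value stays in [0, 255]. That is why the same division by 255 later normalizes both the color and the grayscale arrays. The result is float64, which the notebook converts to float32 before training.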

(x_train, _), (x_test, _) = cifar10.load_data()

img_rows = x_train.shape[1]

img_cols = x_train.shape[2]

channels = x_train.shape[3]

imgs_dir = 'saved_images'

save_dir = os.path.join(os.getcwd(), imgs_dir)

if not os.path.isdir(save_dir):
    os.makedirs(save_dir)

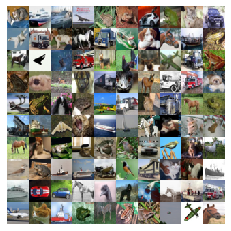

imgs = x_test[:100]

imgs = imgs.reshape((10, 10, img_rows, img_cols, channels))

imgs = np.vstack([np.hstack(i) for i in imgs])

plt.figure()

plt.axis('off')

plt.imshow(imgs, interpolation='none')

plt.show()
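The grid above comes from a reshape plus two stacking calls. First, 100 images are reshaped into a 10x10 array of images. Then `np.hstack` joins each row of images side by side, and `np.vstack` stacks the ten rows. The sketch below wraps those steps in a hypothetical helper, `make_grid`, and checks it on synthetic images where every pixel of image k equals k. The helper works for RGB images (H, W, 3) and for the grayscale images (H, W) used next.

```python
import numpy as np


def make_grid(images, rows, cols):
    """Tile rows*cols images of shape (H, W) or (H, W, C) into a single image."""
    assert images.shape[0] == rows * cols, "need exactly rows*cols images"
    # (N, H, W[, C]) -> (rows, cols, H, W[, C])
    grid = images.reshape((rows, cols) + images.shape[1:])
    # np.hstack joins the images of one grid row along the width axis,
    # np.vstack then stacks the grid rows along the height axis
    return np.vstack([np.hstack(list(row)) for row in grid])


# 100 tiny 4x4 RGB "images" where every pixel of image k equals k
imgs = np.repeat(np.arange(100), 4 * 4 * 3).reshape(100, 4, 4, 3)
tiled = make_grid(imgs, 10, 10)
print(tiled.shape)  # (40, 40, 3)
```

Image k ends up in grid row k // 10, column k % 10. With the notebook's 32x32 images, the same call on `x_test[:100]` gives a 320x320 picture.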

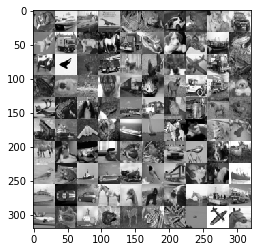

x_train_gray = rbg2gray(x_train)

x_test_gray = rbg2gray(x_test)

imgs = x_test_gray[:100]

imgs = imgs.reshape((10, 10, img_rows, img_cols))

imgs = np.vstack([np.hstack(i) for i in imgs])

plt.figure()

plt.imshow(imgs, interpolation='none', cmap='gray')

plt.show()

x_train = x_train.astype('float32') / 255

x_test = x_test.astype('float32') / 255

x_train_gray = x_train_gray.astype('float32') / 255

x_test_gray = x_test_gray.astype('float32') / 255

x_train = x_train.reshape(x_train.shape[0], img_rows, img_cols, channels)

x_test = x_test.reshape(x_test.shape[0], img_rows, img_cols, channels)

x_train_gray = x_train_gray.reshape(x_train_gray.shape[0], img_rows, img_cols, 1)

x_test_gray = x_test_gray.reshape(x_test_gray.shape[0], img_rows, img_cols, 1)
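The preprocessing does two things. It scales pixel values to [0, 1], which matches the decoder's sigmoid output. It also gives the grayscale arrays an explicit channel axis, because Conv2D expects (batch, height, width, channels) input. The sketch below applies both steps to a hypothetical float64 batch standing in for `x_test_gray`. It shows that the explicit `reshape` is equivalent to appending an axis with `np.newaxis`.

```python
import numpy as np

# Hypothetical stand-in for rbg2gray output: float64 values in [0, 255], no channel axis
batch = np.linspace(0, 255, num=2 * 32 * 32).reshape(2, 32, 32)

scaled = batch.astype('float32') / 255                 # values now in [0, 1]
with_channel = scaled.reshape(scaled.shape[0], 32, 32, 1)

# The same trailing axis can be added without spelling out the sizes
assert np.array_equal(with_channel, scaled[..., np.newaxis])
print(with_channel.shape, with_channel.dtype)          # (2, 32, 32, 1) float32
print(with_channel.min(), with_channel.max())          # 0.0 1.0
```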

Model

Hyperparameters

input_shape = (img_rows, img_cols, 1)

batch_size = 32

kernel_size = 3

latent_dim = 256

layer_filters = [64, 128, 256]
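These hyperparameters fix a few numbers that show up later. There are 1563 steps per epoch in the training log. The encoder's last feature map is 4x4x256, and the Flatten output has 4096 units. The pure-Python arithmetic below derives all three. It assumes the 50,000-image CIFAR-10 training set, and that each stride-2 Conv2D with padding='same' halves the height and width (rounding up).

```python
import math

# Values from the hyperparameter cell and the CIFAR-10 training set
num_train = 50000
img_size = 32
batch_size = 32
layer_filters = [64, 128, 256]

# One optimizer step per batch; the last, partial batch still counts
steps_per_epoch = math.ceil(num_train / batch_size)

# Each stride-2 Conv2D with padding='same' halves the spatial size (rounding up)
size = img_size
for _ in layer_filters:
    size = math.ceil(size / 2)

flat_dim = size * size * layer_filters[-1]
print(steps_per_epoch, size, flat_dim)  # 1563 4 4096
```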

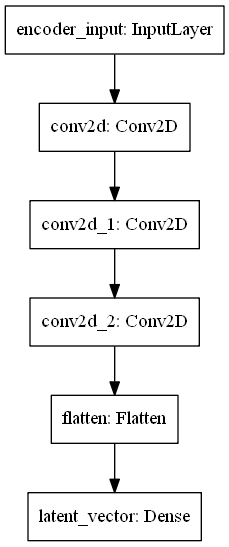

Encoder

inputs = Input(shape = input_shape, name='encoder_input')

x = inputs

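for filters in layer_filters:
    # Each stride-2 convolution halves height and width: 32 -> 16 -> 8 -> 4
    x = Conv2D(filters=filters, kernel_size=kernel_size, strides=2, activation='relu', padding='same')(x)

# Remember the (None, 4, 4, 256) feature-map shape so the decoder can undo the Flatten
shape = K.int_shape(x)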

x = Flatten()(x)

latent = Dense(latent_dim, name='latent_vector')(x)

encoder = Model(inputs, latent, name='encoder')

encoder.summary()

Model: "encoder"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| encoder_input (InputLayer) | [(None, 32, 32, 1)] | 0 |
| conv2d (Conv2D) | (None, 16, 16, 64) | 640 |
| conv2d_1 (Conv2D) | (None, 8, 8, 128) | 73856 |
| conv2d_2 (Conv2D) | (None, 4, 4, 256) | 295168 |
| flatten (Flatten) | (None, 4096) | 0 |
| latent_vector (Dense) | (None, 256) | 1048832 |

Total params: 1,418,496

Trainable params: 1,418,496

Non-trainable params: 0
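The Param # column in the encoder summary follows from two formulas:

- **Conv2D:** k x k x in_channels weights per filter, plus one bias per filter.
- **Dense:** a full in_features x units matrix, plus one bias per unit.

The sketch below recomputes every row from the notebook's hyperparameters. `conv_params` and `dense_params` are illustrative helpers, not Keras functions.

```python
def conv_params(kernel_size, in_channels, filters):
    """Conv2D weights: one k x k x in_channels kernel per filter, plus one bias per filter."""
    return kernel_size * kernel_size * in_channels * filters + filters


def dense_params(in_features, units):
    """Dense weights: a full in_features x units matrix plus one bias per unit."""
    return in_features * units + units


kernel_size, latent_dim = 3, 256
layer_filters = [64, 128, 256]

counts = []
in_channels = 1  # grayscale input
for filters in layer_filters:
    counts.append(conv_params(kernel_size, in_channels, filters))
    in_channels = filters
counts.append(dense_params(4 * 4 * 256, latent_dim))  # Flatten (4096) -> latent_vector

print(counts)       # [640, 73856, 295168, 1048832]
print(sum(counts))  # 1418496
```

The single Dense layer that produces the 256-dimensional latent vector holds roughly three quarters of the encoder's parameters.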

plot_model(encoder)

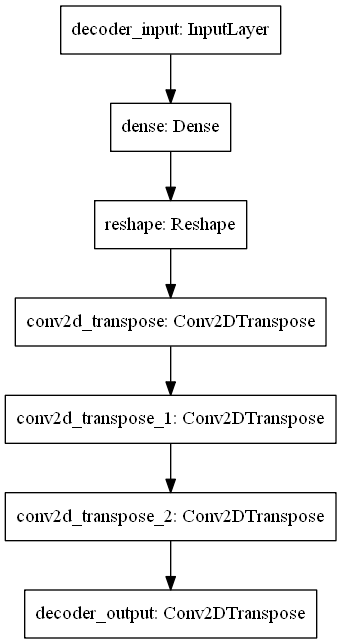

Decoder

latent_inputs = Input(shape=(latent_dim, ), name='decoder_input')

x = Dense(shape[1]*shape[2]*shape[3])(latent_inputs)

x = Reshape((shape[1], shape[2], shape[3]))(x)

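for filters in layer_filters[::-1]:
    # Each stride-2 transposed convolution doubles height and width: 4 -> 8 -> 16 -> 32
    x = Conv2DTranspose(filters=filters, kernel_size=kernel_size, strides=2, activation='relu', padding='same')(x)

# Map to `channels` (3, RGB) outputs in [0, 1], matching the normalized color targets
outputs = Conv2DTranspose(filters=channels, kernel_size=kernel_size, activation='sigmoid', padding='same', name='decoder_output')(x)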

decoder = Model(latent_inputs, outputs, name='decoder')

decoder.summary()

Model: "decoder"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| decoder_input (InputLayer) | [(None, 256)] | 0 |
| dense (Dense) | (None, 4096) | 1052672 |
| reshape (Reshape) | (None, 4, 4, 256) | 0 |
| conv2d_transpose (Conv2DTranspose) | (None, 8, 8, 256) | 590080 |
| conv2d_transpose_1 (Conv2DTranspose) | (None, 16, 16, 128) | 295040 |
| conv2d_transpose_2 (Conv2DTranspose) | (None, 32, 32, 64) | 73792 |
| decoder_output (Conv2DTranspose) | (None, 32, 32, 3) | 1731 |

Total params: 2,013,315

Trainable params: 2,013,315

Non-trainable params: 0
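The decoder mirrors the encoder with two behaviors worth checking. First, a Conv2DTranspose with padding='same' multiplies height and width by its stride. Second, its kernel has the same number of weights as a Conv2D kernel with the same sizes. The sketch below reproduces the decoder's output sizes and Param # column on those assumptions. The last layer keeps the default strides=1 and maps 64 feature maps to 3 RGB channels, which is what turns a 1-channel input into a color output. The helper names are illustrative only.

```python
def conv_transpose_same_size(size, stride):
    """Output height/width of Conv2DTranspose with padding='same': input size times stride."""
    return size * stride


def conv_params(kernel_size, in_channels, filters):
    """k x k x in_channels weights per filter plus one bias per filter."""
    return kernel_size * kernel_size * in_channels * filters + filters


kernel_size, latent_dim, channels = 3, 256, 3
layer_filters = [64, 128, 256]

params = [latent_dim * (4 * 4 * 256) + 4 * 4 * 256]  # Dense: 256 -> 4096, plus biases
sizes = []
size, in_channels = 4, 256  # after Reshape((4, 4, 256))
for filters in layer_filters[::-1]:  # 256, 128, 64, each with strides=2
    size = conv_transpose_same_size(size, 2)
    sizes.append(size)
    params.append(conv_params(kernel_size, in_channels, filters))
    in_channels = filters
# decoder_output keeps the default strides=1, so the size stays at 32
sizes.append(conv_transpose_same_size(size, 1))
params.append(conv_params(kernel_size, in_channels, channels))

print(sizes)        # [8, 16, 32, 32]
print(params)       # [1052672, 590080, 295040, 73792, 1731]
print(sum(params))  # 2013315
```

Adding the encoder's 1,418,496 parameters gives the 3,431,811 reported for the full autoencoder.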

plot_model(decoder)

Autoencoder

AE = Model(inputs, decoder(encoder(inputs)), name='autoencoder')

AE.summary()

Model: "autoencoder"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| encoder_input (InputLayer) | [(None, 32, 32, 1)] | 0 |
| encoder (Functional) | (None, 256) | 1418496 |
| decoder (Functional) | (None, 32, 32, 3) | 2013315 |

Total params: 3,431,811

Trainable params: 3,431,811

Non-trainable params: 0

save_dir = os.path.join(os.getcwd(), 'saved_models')

model_name = 'colorized_ae_model.{epoch:03d}.h5'

if not os.path.isdir(save_dir):
    os.makedirs(save_dir)

filepath = os.path.join(save_dir, model_name)
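ModelCheckpoint fills `{epoch:03d}` in the file name with the epoch number, zero-padded to three digits. The training log shows colorized_ae_model.001.h5 after epoch 1. With save_best_only=True, a file is written only when val_loss improves, so the checkpoint with the highest epoch number is the best model. The sketch below shows the name pattern with `str.format` and picks the best file from a list of names. `best_checkpoint` is a hypothetical helper, and the epoch numbers are the ones the training log reports as saved.

```python
import re

model_name = 'colorized_ae_model.{epoch:03d}.h5'

# Keras fills {epoch} itself; str.format shows what the pattern produces
print(model_name.format(epoch=9))  # colorized_ae_model.009.h5


def best_checkpoint(filenames):
    """Hypothetical helper: return the matching file with the highest epoch number."""
    pattern = re.compile(r'colorized_ae_model\.(\d{3})\.h5$')
    epochs = {}
    for name in filenames:
        match = pattern.search(name)
        if match:
            epochs[int(match.group(1))] = name
    return epochs[max(epochs)]


# Epochs at which the training log reports a saved model
saved = [model_name.format(epoch=e) for e in (1, 2, 3, 4, 5, 6, 9, 15)]
print(best_checkpoint(saved))  # colorized_ae_model.015.h5
```

The selected path could then be passed to `tf.keras.models.load_model` to restore the best weights instead of the ones from the final epoch.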

If the validation loss has not improved for 5 epochs, reduce the learning rate by a factor of sqrt(0.1) (about 0.316).

lr_reducer = ReduceLROnPlateau(factor=np.sqrt(0.1),
                               cooldown=0, patience=5, verbose=1, min_lr=0.5e-6)

checkpoint = ModelCheckpoint(filepath=filepath, monitor='val_loss', verbose=1, save_best_only=True)

AE.compile(loss='mse', optimizer='adam')

callbacks = [lr_reducer, checkpoint]

AE.fit(x_train_gray, x_train, validation_data=(x_test_gray, x_test), epochs=30, batch_size=batch_size, callbacks=callbacks)

Epoch 1/30

1563/1563 [==============================] - ETA: 0s - loss: 0.0157

Epoch 00001: val_loss improved from inf to 0.01145, saving model to C:\Users\ilvna\Portfolio\DS\AE\saved_models\colorized_ae_model.001.h5

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0157 - val_loss: 0.0114

Epoch 2/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0102

Epoch 00002: val_loss improved from 0.01145 to 0.00942, saving model to C:\Users\ilvna\Portfolio\DS\AE\saved_models\colorized_ae_model.002.h5

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0102 - val_loss: 0.0094

Epoch 3/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0092

Epoch 00003: val_loss improved from 0.00942 to 0.00894, saving model to C:\Users\ilvna\Portfolio\DS\AE\saved_models\colorized_ae_model.003.h5

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0092 - val_loss: 0.0089

Epoch 4/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0087

Epoch 00004: val_loss improved from 0.00894 to 0.00858, saving model to C:\Users\ilvna\Portfolio\DS\AE\saved_models\colorized_ae_model.004.h5

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0087 - val_loss: 0.0086

Epoch 5/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0083

Epoch 00005: val_loss improved from 0.00858 to 0.00817, saving model to C:\Users\ilvna\Portfolio\DS\AE\saved_models\colorized_ae_model.005.h5

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0083 - val_loss: 0.0082

Epoch 6/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0080

Epoch 00006: val_loss improved from 0.00817 to 0.00792, saving model to C:\Users\ilvna\Portfolio\DS\AE\saved_models\colorized_ae_model.006.h5

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0080 - val_loss: 0.0079

Epoch 7/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0077

Epoch 00007: val_loss did not improve from 0.00792

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0077 - val_loss: 0.0081

Epoch 8/30

1562/1563 [============================>.] - ETA: 0s - loss: 0.0075

Epoch 00008: val_loss did not improve from 0.00792

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0075 - val_loss: 0.0080

Epoch 9/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0073

Epoch 00009: val_loss improved from 0.00792 to 0.00772, saving model to C:\Users\ilvna\Portfolio\DS\AE\saved_models\colorized_ae_model.009.h5

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0073 - val_loss: 0.0077

Epoch 10/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0070

Epoch 00010: val_loss did not improve from 0.00772

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0070 - val_loss: 0.0078

Epoch 11/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0068

Epoch 00011: val_loss did not improve from 0.00772

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0068 - val_loss: 0.0077

Epoch 12/30

1559/1563 [============================>.] - ETA: 0s - loss: 0.0066

Epoch 00012: val_loss did not improve from 0.00772

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0066 - val_loss: 0.0081

Epoch 13/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0064

Epoch 00013: val_loss did not improve from 0.00772

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0064 - val_loss: 0.0078

Epoch 14/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0061

Epoch 00014: ReduceLROnPlateau reducing learning rate to 0.00031622778103685084.

Epoch 00014: val_loss did not improve from 0.00772

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0061 - val_loss: 0.0078

Epoch 15/30

1559/1563 [============================>.] - ETA: 0s - loss: 0.0053

Epoch 00015: val_loss improved from 0.00772 to 0.00750, saving model to C:\Users\ilvna\Portfolio\DS\AE\saved_models\colorized_ae_model.015.h5

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0053 - val_loss: 0.0075

Epoch 16/30

1560/1563 [============================>.] - ETA: 0s - loss: 0.0051

Epoch 00016: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0051 - val_loss: 0.0076

Epoch 17/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0049

Epoch 00017: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0049 - val_loss: 0.0075

Epoch 18/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0048

Epoch 00018: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0048 - val_loss: 0.0077

Epoch 19/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0046

Epoch 00019: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0046 - val_loss: 0.0078

Epoch 20/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0045

Epoch 00020: ReduceLROnPlateau reducing learning rate to 0.00010000000639606199.

Epoch 00020: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0045 - val_loss: 0.0077

Epoch 21/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0042

Epoch 00021: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0042 - val_loss: 0.0077

Epoch 22/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0042

Epoch 00022: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0042 - val_loss: 0.0077

Epoch 23/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0041

Epoch 00023: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0041 - val_loss: 0.0077

Epoch 24/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0041

Epoch 00024: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0041 - val_loss: 0.0077

Epoch 25/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0041

Epoch 00025: ReduceLROnPlateau reducing learning rate to 3.1622778103685084e-05.

Epoch 00025: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0041 - val_loss: 0.0078

Epoch 26/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0040

Epoch 00026: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0040 - val_loss: 0.0078

Epoch 27/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0039

Epoch 00027: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0039 - val_loss: 0.0078

Epoch 28/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0039

Epoch 00028: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0039 - val_loss: 0.0079

Epoch 29/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0039

Epoch 00029: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0039 - val_loss: 0.0078

Epoch 30/30

1561/1563 [============================>.] - ETA: 0s - loss: 0.0039

Epoch 00030: ReduceLROnPlateau reducing learning rate to 1.0000000409520217e-05.

Epoch 00030: val_loss did not improve from 0.00750

1563/1563 [==============================] - 15s 10ms/step - loss: 0.0039 - val_loss: 0.0078

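The learning-rate messages at epochs 14, 20, 25 and 30 follow from patience=5 and factor=sqrt(0.1). The rate is cut once five epochs pass without val_loss beating the best value so far. The simplified pure-Python sketch below reproduces those epochs from the val_loss values printed in the log. It is not Keras's implementation, and it rests on three assumptions:

- Keras's default min_delta of 1e-4 applies.
- There is no cooldown.
- Adam starts from its default learning rate of 0.001, which is consistent with the first logged reduction to 0.000316.

`simulate_plateau` is a hypothetical name.

```python
import math

# val_loss per epoch, read off the training log (5-digit values where the
# checkpoint messages print them, otherwise the 4-digit progress-bar values)
val_loss = [0.01145, 0.00942, 0.00894, 0.00858, 0.00817, 0.00792, 0.0081, 0.0080,
            0.00772, 0.0078, 0.0077, 0.0081, 0.0078, 0.0078, 0.00750, 0.0076,
            0.0075, 0.0077, 0.0078, 0.0077, 0.0077, 0.0077, 0.0077, 0.0077,
            0.0078, 0.0078, 0.0078, 0.0079, 0.0078, 0.0078]


def simulate_plateau(losses, lr=1e-3, factor=math.sqrt(0.1), patience=5,
                     min_lr=0.5e-6, min_delta=1e-4):
    """Simplified reduce-on-plateau rule (cooldown=0): cut lr by `factor` once
    `patience` epochs pass without beating the best loss by more than min_delta."""
    best, wait, reductions = math.inf, 0, []
    for epoch, loss in enumerate(losses, start=1):
        if loss < best - min_delta:
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience and lr > min_lr:
                lr = max(lr * factor, min_lr)
                reductions.append((epoch, lr))
                wait = 0
    return reductions


for epoch, lr in simulate_plateau(val_loss):
    print(epoch, f'{lr:.3g}')
```

The same log shows the training loss falling steadily to 0.0039 while val_loss hovered between 0.0075 and 0.0081 from epoch 9 on, so the model began to overfit. Because of save_best_only=True, the saved model from epoch 15 (val_loss 0.00750) is the one worth keeping.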

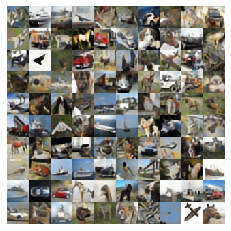

x_decoded = AE.predict(x_test_gray)

imgs_decoded = x_decoded[:100]

imgs_decoded = imgs_decoded.reshape((10, 10, img_rows, img_cols, channels))

imgs_decoded = np.vstack([np.hstack(i) for i in imgs_decoded])

plt.figure()

plt.axis('off')

plt.imshow(imgs_decoded, interpolation='none')

plt.show()
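The grid gives a visual impression of the colorization. A number per image makes it easier to find the best and worst reconstructions. The sketch below computes per-image mean squared error, the quantity the 'mse' loss averages, and peak signal-to-noise ratio, PSNR = 10·log10(MAX² / MSE), for images scaled to [0, MAX]. Both helper names are hypothetical, and the arrays are synthetic stand-ins for `x_decoded` and `x_test`.

```python
import numpy as np


def per_image_mse(pred, target):
    """Mean squared error of each image, averaged over height, width and channels."""
    return np.mean((pred - target) ** 2, axis=(1, 2, 3))


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, max_val]."""
    mse = per_image_mse(pred, target)
    with np.errstate(divide='ignore'):  # a perfect match gives MSE 0 -> PSNR inf
        return 10 * np.log10(max_val ** 2 / mse)


# Synthetic stand-ins: the first prediction is exact, the second is off by 0.1 everywhere
target = np.full((2, 32, 32, 3), 0.5, dtype=np.float32)
pred = target.copy()
pred[1] += 0.1

print(per_image_mse(pred, target))
print(psnr(pred, target))  # first image: inf, second: about 20 dB
```

On the notebook's own arrays, `psnr(x_decoded, x_test)` would give one score per test image. Sorting by it is one way to pick examples for the side-by-side plots below.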

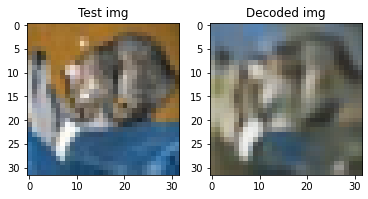

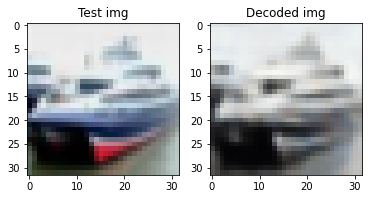

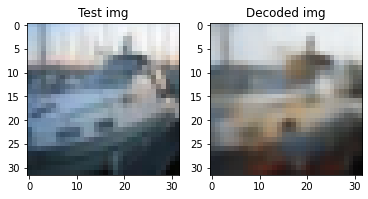

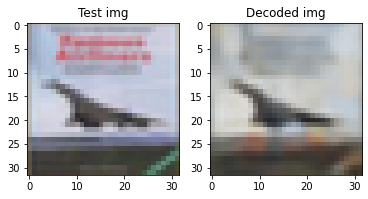

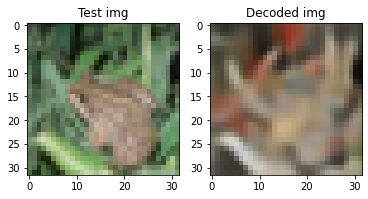

# Show the first five test images next to their colorized reconstructions
for i in range(5):
    plt.subplot(1, 2, 1)
    plt.imshow(x_test[i])
    plt.title('Test img')
    plt.subplot(1, 2, 2)
    plt.imshow(x_decoded[i])
    plt.title('Decoded img')
    plt.show()