LSTM Autoencoder


An LSTM Autoencoder applies an Encoder-Decoder LSTM architecture to sequence data. The encoder reads the input sequence one time step at a time. After the last input step has been read, the decoder either reconstructs the input sequence or outputs a prediction of a target sequence.
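The data flow can be made concrete with a minimal from-scratch NumPy sketch of the forward pass. It uses random, untrained weights and several assumptions that differ from the Keras model below: 8 hidden units instead of 100, `tanh` activations (the Keras default) instead of `relu`, and our own [i, f, g, o] gate layout. It illustrates the encoder-decoder idea only and is not how Keras implements LSTM internally.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_lstm(input_dim, units):
    # W: input kernel, U: recurrent kernel, b: bias; the 4 gates are stacked along the last axis
    return (rng.normal(scale=0.1, size=(input_dim, 4 * units)),
            rng.normal(scale=0.1, size=(units, 4 * units)),
            np.zeros(4 * units))

def lstm_forward(x_seq, params, units):
    """Run one LSTM over x_seq of shape (timesteps, input_dim); return every hidden state."""
    W, U, b = params
    h = np.zeros(units)
    c = np.zeros(units)
    hs = []
    for x_t in x_seq:  # the sequence is consumed one time step at a time
        z = x_t @ W + h @ U + b
        i, f, g, o = np.split(z, 4)
        i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
        c = f * c + i * np.tanh(g)  # update the cell state
        h = o * np.tanh(c)          # new hidden state
        hs.append(h)
    return np.stack(hs)  # (timesteps, units)

units, n_in = 8, 9
seq = np.linspace(0.1, 0.9, n_in).reshape(n_in, 1)  # (timesteps, features)

enc = init_lstm(1, units)
dec = init_lstm(units, units)
W_out = rng.normal(scale=0.1, size=(units, 1))
b_out = np.zeros(1)

# Encoder: keep only the hidden state after the last input (like return_sequences=False)
code = lstm_forward(seq, enc, units)[-1]            # (units,)
# RepeatVector: hand the same code to the decoder at every output step
repeated = np.repeat(code[None, :], n_in, axis=0)   # (n_in, units)
# Decoder: keep every hidden state (like return_sequences=True)
dec_h = lstm_forward(repeated, dec, units)          # (n_in, units)
# TimeDistributed(Dense(1)): the same weights applied at each time step
y = dec_h @ W_out + b_out                           # (n_in, 1)
print(code.shape, repeated.shape, y.shape)
```

The whole input is compressed into the single vector `code` before the decoder produces anything. This fixed-size bottleneck is what makes the model an autoencoder.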

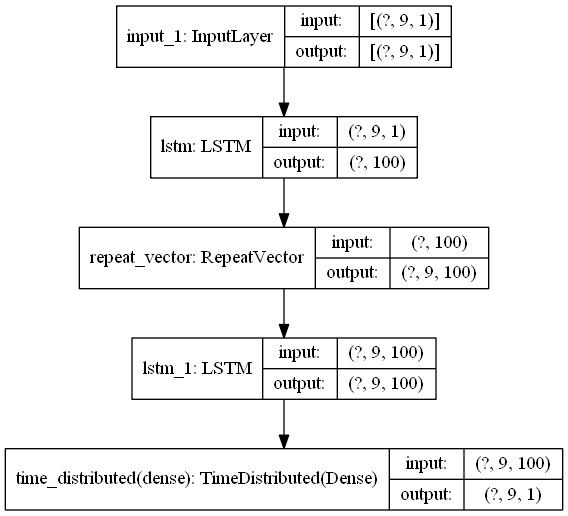

Reconstruction LSTM Autoencoder

An autoencoder for reconstruction: the decoder produces an output that is as close as possible to the input. To train an LSTM, the data is reshaped into a 3D array of shape (samples, timesteps, features).
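A short NumPy sketch of the 3D layout, assuming a single univariate series as in this notebook. The extra stacked series are made up purely to show the `samples` axis.

```python
import numpy as np

# One univariate series: 9 time steps, 1 feature per step
series = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])

# (samples, timesteps, features) = (1, 9, 1)
x_single = series.reshape((1, len(series), 1))

# Same result, letting NumPy infer the timestep axis with -1
x_alt = series.reshape(1, -1, 1)

# Several equal-length series stacked as separate samples -> (3, 9, 1)
batch = np.stack([series, series * 2, series * 3])[..., np.newaxis]

print(x_single.shape, batch.shape)
```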

Imports

import numpy as np

import pandas as pd

import tensorflow as tf

from tensorflow.keras.layers import Input, LSTM, RepeatVector, TimeDistributed, Dense

from tensorflow.keras.models import Model

from tensorflow.keras.utils import plot_model

import warnings

warnings.filterwarnings('ignore')

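physical_devices = tf.config.list_physical_devices('GPU')

# Grow GPU memory on demand; skip on CPU-only machines, where physical_devices is empty and [0] would fail

if physical_devices:
    tf.config.experimental.set_memory_growth(physical_devices[0], enable=True)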

sequence = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])

num_in = len(sequence)

sequence = sequence.reshape((1, num_in, 1))

Model

inputs = Input(shape = (num_in, 1))

x = LSTM(100, activation = 'relu')(inputs)

x = RepeatVector(num_in)(x)

x = LSTM(100, activation = 'relu', return_sequences=True)(x)

x = TimeDistributed(Dense(1))(x)

model = Model(inputs, x)

WARNING:tensorflow:Layer lstm will not use cuDNN kernel since it doesn't meet the cuDNN kernel criteria. It will use generic GPU kernel as fallback when running on GPU

WARNING:tensorflow:Layer lstm_1 will not use cuDNN kernel since it doesn't meet the cuDNN kernel criteria. It will use generic GPU kernel as fallback when running on GPU

model.compile(optimizer='adam', loss='mse')

model.summary()

Model: "functional_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) [(None, 9, 1)] 0

_________________________________________________________________

lstm (LSTM) (None, 100) 40800

_________________________________________________________________

repeat_vector (RepeatVector) (None, 9, 100) 0

_________________________________________________________________

lstm_1 (LSTM) (None, 9, 100) 80400

_________________________________________________________________

time_distributed (TimeDistri (None, 9, 1) 101

=================================================================

Total params: 121,301

Trainable params: 121,301

Non-trainable params: 0

_________________________________________________________________
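The parameter counts in the summary follow from the standard LSTM formula. Each of the four gates has an input kernel, a recurrent kernel and a bias, which gives 4 × (units × (input_dim + units) + units) weights. A quick check in Python:

```python
def lstm_params(input_dim, units):
    # 4 gates, each with an input kernel, a recurrent kernel and a bias
    return 4 * (units * (input_dim + units) + units)

def dense_params(input_dim, units):
    # weight matrix plus bias
    return input_dim * units + units

enc_params = lstm_params(1, 100)     # lstm: reads 1 feature per step
dec_params = lstm_params(100, 100)   # lstm_1: reads the repeated 100-dim code
head_params = dense_params(100, 1)   # Dense(1) inside TimeDistributed, shared across steps
print(enc_params, dec_params, head_params, enc_params + dec_params + head_params)
# 40800 80400 101 121301
```

RepeatVector and TimeDistributed add no weights of their own, and none of the counts depend on the number of time steps. This is why the prediction model below, with 8 output steps, also has 121,301 parameters.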

plot_model(model, show_shapes=True)

model.fit(sequence, sequence, epochs=300, verbose=0)

<tensorflow.python.keras.callbacks.History at 0x227315da848>

y_hat = model.predict(sequence)

y_hat

array([[[0.11027952],

[0.20542414],

[0.30102822],

[0.3978455 ],

[0.4969649 ],

[0.59912544],

[0.6998908 ],

[0.79965234],

[0.89853156]]], dtype=float32)
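To quantify how close the reconstruction is, the sketch below computes the MSE (the same quantity as `loss='mse'`) from the values printed above. These numbers come from a single training run and will differ slightly on each run.

```python
import numpy as np

target = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])
# y_hat values copied from the output above, flattened from shape (1, 9, 1)
y_hat_values = np.array([0.11027952, 0.20542414, 0.30102822, 0.3978455, 0.4969649,
                         0.59912544, 0.6998908, 0.79965234, 0.89853156])

abs_err = np.abs(y_hat_values - target)
mse = np.mean((y_hat_values - target) ** 2)
print(f"MSE={mse:.2e}, worst step={abs_err.argmax()}, max |error|={abs_err.max():.4f}")
```

In this run the MSE is on the order of 1e-5, and the largest error falls on the first time step.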

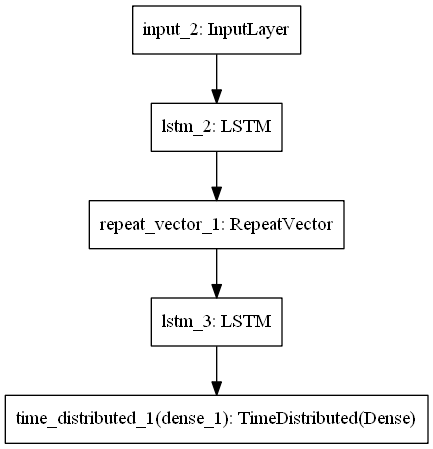

Prediction LSTM Autoencoder

An LSTM autoencoder for time-series prediction: the input is the sequence at time steps t, and the output is the same sequence shifted one step ahead (t+1).
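The target alignment can be sketched in NumPy. Each target step is the input step one position later. The model still reads the full 9-step input before emitting all 8 outputs. `shift_target` is a hypothetical helper for illustration, not part of Keras.

```python
import numpy as np

series = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])
x_demo = series.reshape((1, -1, 1))   # (1, 9, 1)

def shift_target(x, k=1):
    """Hypothetical helper: drop the first k steps so that target[t] == x[t + k]."""
    return x[:, k:, :]

y_demo = shift_target(x_demo, 1)      # (1, 8, 1), equivalent to seq_in[:, 1:, :]
for t in range(y_demo.shape[1]):
    print(f"t={t}: input {x_demo[0, t, 0]:.1f} -> target {y_demo[0, t, 0]:.1f}")
```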

seq_in = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])

n_in = len(seq_in)

seq_in = seq_in.reshape((1, n_in, 1))

seq_in.shape

(1, 9, 1)

seq_out = seq_in[:, 1:, :]

n_out = n_in - 1

seq_out

array([[[0.2],

[0.3],

[0.4],

[0.5],

[0.6],

[0.7],

[0.8],

[0.9]]])

Model

inputs = Input(shape = (n_in, 1))

x = LSTM(100, activation = 'relu')(inputs)

x = RepeatVector(n_out)(x)

x = LSTM(100, activation = 'relu', return_sequences=True)(x)

x = TimeDistributed(Dense(1))(x)

model_p = Model(inputs, x)

WARNING:tensorflow:Layer lstm_2 will not use cuDNN kernel since it doesn't meet the cuDNN kernel criteria. It will use generic GPU kernel as fallback when running on GPU

WARNING:tensorflow:Layer lstm_3 will not use cuDNN kernel since it doesn't meet the cuDNN kernel criteria. It will use generic GPU kernel as fallback when running on GPU

model_p.summary()

Model: "functional_3"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_2 (InputLayer) [(None, 9, 1)] 0

_________________________________________________________________

lstm_2 (LSTM) (None, 100) 40800

_________________________________________________________________

repeat_vector_1 (RepeatVecto (None, 8, 100) 0

_________________________________________________________________

lstm_3 (LSTM) (None, 8, 100) 80400

_________________________________________________________________

time_distributed_1 (TimeDist (None, 8, 1) 101

=================================================================

Total params: 121,301

Trainable params: 121,301

Non-trainable params: 0

_________________________________________________________________

plot_model(model_p)

model_p.compile(optimizer='adam', loss='mse')

model_p.fit(seq_in, seq_out, epochs=300, verbose=0)

<tensorflow.python.keras.callbacks.History at 0x2274f88ca88>

yhat = model_p.predict(seq_in)

print(seq_in)

print(yhat)

[[[0.1]

[0.2]

[0.3]

[0.4]

[0.5]

[0.6]

[0.7]

[0.8]

[0.9]]]

[[[0.1651445 ]

[0.28780752]

[0.40219113]

[0.5096301 ]

[0.6112077 ]

[0.7078306 ]

[0.80026495]

[0.88917077]]]

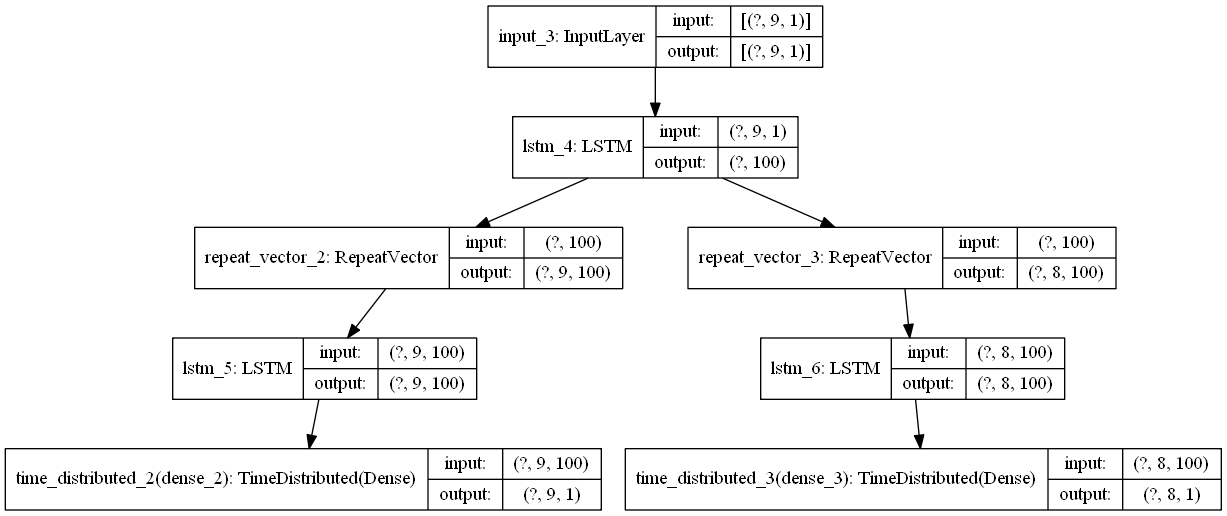

Composite LSTM Autoencoder

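Reconstruction + Prediction: a single shared encoder feeds two decoders. One decoder reconstructs the input sequence, and the other predicts the sequence shifted one step ahead. Both are trained at the same time.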

seq_in = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])

n_in = len(seq_in)

seq_in = seq_in.reshape((1, n_in, 1))

seq_in.shape

seq_out = seq_in[:, 1:, :]

n_out = n_in - 1

Model

inputs = Input(shape=(n_in, 1))

encoder = LSTM(100, activation='relu')(inputs)

# Decoder 1: reconstruct the input sequence (n_in steps)

decoder_1 = RepeatVector(n_in)(encoder)

decoder_1 = LSTM(100, activation='relu', return_sequences=True)(decoder_1)

decoder_1 = TimeDistributed(Dense(1))(decoder_1)

# Decoder 2: predict the sequence shifted one step ahead (n_out steps)

decoder_2 = RepeatVector(n_out)(encoder)

decoder_2 = LSTM(100, activation='relu', return_sequences=True)(decoder_2)

decoder_2 = TimeDistributed(Dense(1))(decoder_2)

model = Model(inputs = inputs, outputs = [decoder_1, decoder_2])

WARNING:tensorflow:Layer lstm_4 will not use cuDNN kernel since it doesn't meet the cuDNN kernel criteria. It will use generic GPU kernel as fallback when running on GPU

WARNING:tensorflow:Layer lstm_5 will not use cuDNN kernel since it doesn't meet the cuDNN kernel criteria. It will use generic GPU kernel as fallback when running on GPU

WARNING:tensorflow:Layer lstm_6 will not use cuDNN kernel since it doesn't meet the cuDNN kernel criteria. It will use generic GPU kernel as fallback when running on GPU

plot_model(model, show_shapes=True)

model.compile(optimizer='adam', loss='mse')

model.fit(seq_in, [seq_in, seq_out], epochs=300, verbose=0)

<tensorflow.python.keras.callbacks.History at 0x2274f865e88>
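When a multi-output model is compiled with a single `loss='mse'`, Keras applies that loss to each output and minimizes their sum. Each term is weighted by the `loss_weights` argument of `compile`, which defaults to 1. The NumPy sketch below mirrors that sum. The predictions are toy constant offsets chosen only to show how the terms combine.

```python
import numpy as np

def mse(y_true, y_pred):
    return np.mean((y_true - y_pred) ** 2)

def composite_loss(y_true_list, y_pred_list, weights=None):
    """Weighted sum of per-output MSE losses (weights assumed to default to 1.0)."""
    if weights is None:
        weights = [1.0] * len(y_true_list)
    return sum(w * mse(t, p) for w, t, p in zip(weights, y_true_list, y_pred_list))

demo_in = np.arange(1, 10, dtype=float).reshape(1, 9, 1) / 10   # 0.1 ... 0.9
demo_out = demo_in[:, 1:, :]                                      # 0.2 ... 0.9

# Toy predictions: constant offsets on each head
recon_pred = demo_in + 0.01
pred_pred = demo_out - 0.02

total = composite_loss([demo_in, demo_out], [recon_pred, pred_pred])
print(total)  # approximately 0.01**2 + 0.02**2 = 5e-4
```

Passing, for example, `weights=[1.0, 0.0]` would train only the reconstruction head. The same idea applies to Keras through `loss_weights`.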

yhat = model.predict(seq_in)

yhat

[array([[[0.10464475],

[0.20001109],

[0.29861292],

[0.39890134],

[0.49977863],

[0.60052794],

[0.7007491 ],

[0.8003011 ],

[0.89925253]]], dtype=float32),

array([[[0.16505054],

[0.2889781 ],

[0.40312886],

[0.50964355],

[0.61056316],

[0.7070253 ],

[0.79992574],

[0.8900281 ]]], dtype=float32)]
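To compare the composite model's two heads with the single-task models trained earlier, the sketch below computes the MSE of each head from the printed outputs in this notebook. Each value comes from one training run, so small differences should not be read as a general result.

```python
import numpy as np

target_recon = np.arange(1, 10) / 10      # 0.1 ... 0.9
target_pred = target_recon[1:]            # 0.2 ... 0.9

# Values copied from the printed outputs above
single_recon = np.array([0.11027952, 0.20542414, 0.30102822, 0.3978455, 0.4969649,
                         0.59912544, 0.6998908, 0.79965234, 0.89853156])
single_pred = np.array([0.1651445, 0.28780752, 0.40219113, 0.5096301,
                        0.6112077, 0.7078306, 0.80026495, 0.88917077])
comp_recon = np.array([0.10464475, 0.20001109, 0.29861292, 0.39890134, 0.49977863,
                       0.60052794, 0.7007491, 0.8003011, 0.89925253])
comp_pred = np.array([0.16505054, 0.2889781, 0.40312886, 0.50964355,
                      0.61056316, 0.7070253, 0.79992574, 0.8900281])

def mse(a, b):
    return float(np.mean((a - b) ** 2))

results = {
    "single_recon": mse(single_recon, target_recon),
    "composite_recon": mse(comp_recon, target_recon),
    "single_pred": mse(single_pred, target_pred),
    "composite_pred": mse(comp_pred, target_pred),
}
for name, value in results.items():
    print(f"{name:16s} {value:.2e}")
```

In these particular runs, the composite reconstruction head has a lower error than the reconstruction-only model. The two prediction errors are nearly identical.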